1. Introduction

Technological Pedagogical Content Knowledge (TPACK; Mishra & Koehler, 2006) is a well-established framework that has been used extensively to understand and assess teachers' integration of technology into their teaching practices. The framework brings together technological, pedagogical, and content knowledge, the skills that are essential for preparing teachers to integrate digital tools effectively into their pedagogical methods and practices. Due to its comprehensive nature, the TPACK model has been widely explored and validated in a variety of educational contexts. In the present study, we report the results of an exploratory factor analysis (EFA) assessing the construct validity of the TPACK domains in an Italian context with in-training teachers. This analysis aims to confirm the scale's structural integrity and its relevance in capturing the TPACK domains within this specific educational context. By doing so, the present study contributes to the broader body of research on TPACK. The results also offer insights relevant to teacher training programmes in Italy, helping educators in the digital era to feel confident in integrating new technology into their pedagogical practices. The insights into in-training teachers’ readiness have direct implications for the delivery of these training programmes, highlighting the importance of ensuring that they are not only grounded in theory but also practically relevant in equipping teachers for digitally enhanced educational contexts.

The TPACK framework has been established as a useful and robust tool for evaluating teachers' ability to integrate technology effectively into their pedagogical practices. In the context of rapid digital transformation, it is imperative that educators can successfully use innovative technologies within their teaching to create meaningful and engaging learning environments (Antonietti et al., 2022; Pérez, 2014). The effective integration of technology not only includes enhancing and improving traditional teaching methods but also fostering truly inclusive classrooms that cater to diverse learning needs. In the Italian context, while efforts have been made to comply with international regulations for inclusive education, there have been suggestions to refine current school practices in consideration of new approaches (Marsili et al., 2021). As innovative methods increasingly involve the use of assistive technologies, it is crucial to understand how teachers can effectively integrate these tools into their classrooms to address inclusion in educational settings. The validation of TPACK in this Italian context could serve as a model for other countries facing similar challenges, broadening the impact of the present study by offering insights into best practices for technology integration in education. By examining the construct validity of the TPACK framework using a large sample of 1723 future teachers, this study provides a comprehensive dataset that allows for a rich analysis of how well the framework captures the competencies required for contemporary teaching.

While the TPACK framework has been validated in various contexts, the diversity of findings highlights the need for further research in different educational settings. This study contributes to the discourse on the adaptability and validity of the TPACK framework, enhancing understanding within the specific context of Italy. The large sample size and the focus on future teachers offer a particular perspective that adds depth to the ongoing research on TPACK and allows for further comparison with other countries and cultural contexts. The results not only address a gap in the literature by validating the TPACK framework within an Italian context but also have implications for policy making, teacher training programmes, and global discourse. By investigating whether the TPACK model remains a relevant and effective tool for educators, this study contributes to the ongoing development and refinement of the framework, which in turn supports the broader goal of preparing and training teachers to excel in the digital era.

2. Theoretical framework

2.1. TPACK

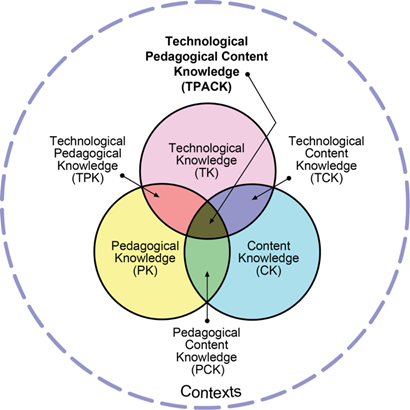

The TPACK framework (shown in Figure 1), derived from the notion of Pedagogical Content Knowledge (PCK) (Shulman, 1986), was developed following five years of experimental research by Keating and Evans (2001) and Koehler and Mishra (2005; 2006). Today, it is recognized as the foundational knowledge base for teachers integrating technologies into education and professional development at all levels, including higher education (La Marca et al., 2018; Li et al., 2024). The components of this theoretical framework depict the interconnection of the three principal domains of knowledge that teachers should possess: Content Knowledge (CK), Pedagogical Knowledge (PK), and Technological Knowledge (TK) (Thompson & Mishra, 2007). The instrument consists of 49 items. The three main domains (TK, PK, CK) interact and form four complex components (PCK, TCK, TPK, TPACK):

· CK – Content Knowledge is the knowledge of teaching subjects;

· PK – Pedagogical Knowledge is the knowledge of teaching and learning methods and processes;

· TK – Technological Knowledge is the knowledge of technologies;

· PCK – Pedagogical Content Knowledge, as theorized by Shulman (1986), is the knowledge of appropriate teaching methodologies and strategies for the subjects;

· TCK – Technological Content Knowledge is the understanding of which specific technologies are best suited for teaching a particular subject;

· TPK – Technological Pedagogical Knowledge is the understanding of how the use of certain technologies impacts teaching and learning processes;

· TPACK – Technological Pedagogical and Content Knowledge is the specialized form of teacher knowledge that encompasses the complex interactions between the three main forms of knowledge (technological, pedagogical, and content) and enables teachers to master them in the specific contexts of their profession.

The seven scales allow respondents to self-assess their competencies in relation to the TPACK model, with responses given on a Likert scale.

Figure 1. TPACK model (reproduced by permission of the publisher, © tpack.org, 2012).

2.2. Prior explorations of TPACK domain construct validity

The construct validity of the TPACK domains has been assessed in a variety of contexts. Koh et al. (2010) assert that no single TPACK scale can be applied to all contexts, so explorations in diverse settings are needed. Previous works addressing the construct validity of TPACK domains have produced varying results. Önal (2016) conducted validity testing with 316 pre-service mathematics teachers in Turkey and identified 9 factors. In contrast, Rauf et al. (2021) found 6 factors in a sample of 100 ESL teachers. Luik et al. (2018) found 3 factors (Technology, Pedagogy, and Content Knowledge) among 413 pre-service teachers in Estonia, diverging from the 7 factors initially proposed by Schmidt et al. (2009). Bostancioğlu and Handley (2018) also identified 6 factors, while Shinas et al. (2013) identified 8 factors in a sample of 365 pre-service teachers. Lavidas et al. (2021) assessed 147 preschool teachers in Greece and found 6 domains.

Turning to validation studies with larger sample sizes, Koh et al. (2010) identified 5 factors. Some studies have supported the 7-factor model of TPACK (Chai et al., 2011; Lin et al., 2013; Pamuk et al., 2015; Prasojo et al., 2020). It is important to note that these studies varied in the number of TPACK items they used when exploring the domains, which could have affected the number of factors identified. In the Italian context, construct validation of the TPACK domains is important for several reasons, including the unique cultural context of the educational system, which may affect how technological knowledge is integrated into teacher-training curricula. Understanding the applicability and validity of TPACK in Italy is particularly important given the variations across cultural settings described above. Moreover, future teachers who are still in training are a crucially important group: because they are still acquiring new skills and proficiencies, assessing these domains with them can help us to understand how prepared future teachers are to meet the evolving demands of the digital era. The scale has previously been validated with Italian pre-service teachers (Magnanini et al., 2023), but that study used a smaller sample of 284 pre-service support teachers; we contribute to the body of research on TPACK construct validity with a large sample of 1723 in-training teachers. Whilst some studies demonstrate that the putative domains of TPACK are well distinguished, findings from other contexts suggest that revisions may be necessary, so an examination of these domains within an Italian context is warranted.

3. Methodology

3.1. Sample

The questionnaire was administered to future teachers enrolled at the University of Palermo, with a total sample size of 1723 participants. Of the sample, 85.43% identified as female and 14.45% as male. Participants were between 22 and 63 years of age (M = 40.0, SD = 8.87).

3.2. Instrument description

The questionnaire was a translated and adapted version of the instrument developed and validated by Schmidt et al. (2009). It included the 7 sections of the TPACK model (Technological Knowledge, Content Knowledge, Pedagogical Knowledge, Technological Pedagogical Knowledge, Technological Content Knowledge, Pedagogical Content Knowledge, and Technological Pedagogical Content Knowledge) (Mishra & Koehler, 2006; 2009). The questionnaire consisted of a total of 49 items (shown in Table 2), each answered on a 5-point Likert scale.

3.3. Data collection and statistical methods

The study used a non-random convenience sampling technique. Responses were collected online via Google Forms over a period of approximately 2 months, between May and June 2024. Participation was voluntary, and consent was obtained to process the results.

Data analysis was conducted using the following statistical methods:

1. Cronbach’s alpha was used to assess the internal consistency of the scales.

2. Exploratory factor analysis (EFA) was conducted on all 49 items, to assess the construct validity and underlying factor structure of the TPACK components when administered in the context of Italian future teachers, and to explore whether the total 49 items continued to belong to the 7 putative factors (TK, CK, PK, TPK, TCK, PCK, TPCK).
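The internal-consistency check in step 1 can be illustrated with a minimal sketch. It assumes the standard formula for Cronbach’s alpha, α = k/(k − 1) · (1 − Σ item variances / variance of total scores), and uses a small invented response matrix, not the study data. The function name `cronbach_alpha` is hypothetical; the study itself ran this analysis in Jamovi.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of k item scores.

    Uses sample variances (n - 1 denominator) for both items and totals.
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Transpose rows (respondents) into columns (items).
    item_columns = list(zip(*responses))
    item_variance_sum = sum(variance(column) for column in item_columns)
    total_variance = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_variance_sum / total_variance)


# Invented 5-point Likert answers from five respondents to three items.
toy_responses = [
    [4, 5, 4],
    [3, 3, 2],
    [5, 5, 5],
    [2, 3, 2],
    [4, 4, 5],
]
print(round(cronbach_alpha(toy_responses), 3))
```

Alpha rises both with stronger inter-item correlations and with the number of items, which is one reason long subscales can reach very high values.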

3.4. Data preparation

Once the data were collected, they were prepared in Jamovi 2.2.5, including handling of missing values, data cleaning, and assumption checks for EFA.

3.4.1. Data cleaning

Data from 144 participants were excluded because their responses were incomplete or they had not consented to the use of their responses in the dissemination of results, leaving 1723 responses for analysis. Proposed standards for the minimum number of participants vary; however, it is generally acknowledged that a minimum of 100 participants is needed, which this study exceeds. Comrey and Lee (1992) assert that a sample of 100 is weak, 200 is fair, 300 is good, 500 is very good, and 1000 or more is excellent, so our sample can be considered excellent.

3.4.2. Assumption checks for exploratory factor analysis

Before conducting EFA, several assumptions were checked. These assessments ensured that the data met the necessary assumptions for conducting EFA. The strength of the methodology supports the validity and reliability of the following EFA results.

· Ordinal data treatment: Given the Likert scale responses, data were treated as ordinal.

· Linearity: A Spearman’s rho correlation matrix indicated intercorrelations among all items. Correlation coefficients above 0.30 are recommended (Tabachnick and Fidell, 2001, cited in Taherdoost et al., 2022). As the majority of correlation coefficients were above 0.3, with a large portion also above 0.5, no items were excluded (Hair et al., 1995; 2006).

· Absence of perfect multicollinearity: Correlation coefficients did not approach ±1, thus meeting this assumption and ensuring no perfect multicollinearity.

· Factorability of the correlation matrix: Bartlett’s Test of Sphericity yielded a significant result (χ²(1176) = 89.844, p < .001), confirming that correlations between items were sufficient for factor analysis. The Kaiser-Meyer-Olkin (KMO) measure of sampling adequacy was high (KMO = 0.984), indicating that the data were suitable for EFA.
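To make the factorability check more concrete, the sketch below computes Bartlett’s test statistic from a correlation matrix, assuming the usual formula χ² = −(n − 1 − (2p + 5)/6) · ln|R| with p(p − 1)/2 degrees of freedom. The function names and the 3 × 3 matrix are illustrative assumptions, not the study’s data or software. The degrees-of-freedom helper reproduces the value of 1176 reported for 49 items.

```python
import math


def bartlett_df(n_items):
    """Degrees of freedom: number of distinct off-diagonal correlations."""
    return n_items * (n_items - 1) // 2


def log_det_spd(matrix):
    """Log-determinant of a symmetric positive-definite matrix via Cholesky."""
    size = len(matrix)
    lower = [[0.0] * size for _ in range(size)]
    for i in range(size):
        for j in range(i + 1):
            partial = sum(lower[i][k] * lower[j][k] for k in range(j))
            if i == j:
                lower[i][j] = math.sqrt(matrix[i][i] - partial)
            else:
                lower[i][j] = (matrix[i][j] - partial) / lower[j][j]
    # det(R) is the squared product of the Cholesky diagonal.
    return 2.0 * sum(math.log(lower[i][i]) for i in range(size))


def bartlett_sphericity(corr, n_obs):
    """Return (chi-square statistic, degrees of freedom) for Bartlett's test."""
    p = len(corr)
    chi2 = -(n_obs - 1 - (2 * p + 5) / 6) * log_det_spd(corr)
    return chi2, bartlett_df(p)


# Invented correlation matrix for three items (illustration only).
toy_corr = [
    [1.0, 0.6, 0.5],
    [0.6, 1.0, 0.4],
    [0.5, 0.4, 1.0],
]
print(bartlett_sphericity(toy_corr, n_obs=200))
print(bartlett_df(49))  # 49 items give 1176 degrees of freedom
```

The p-value would then come from the chi-square distribution with the returned degrees of freedom, which is omitted here because the Python standard library has no chi-square survival function.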

4. Results

4.1. Internal consistency and reliability testing

To assess the reliability of the items within the TPACK survey, internal consistency was tested using Cronbach’s alpha. The combined TPACK scale demonstrated high internal consistency, with a Cronbach’s alpha of 0.982. Table 1 reports the Cronbach’s alpha value of each subscale, alongside its mean and standard deviation. The values indicate that all subscales have high internal consistency, with alpha ranging from 0.923 to 0.967. Whilst values above 0.70 are generally considered acceptable and values around 0.90 very good (Tavakol & Dennick, 2011), some researchers have suggested that values closer to 1.0 could be too high and indicate some redundancy among the items within a scale (Streiner, 2003). Some of our Cronbach’s alpha results are therefore suggestive of such redundancy, which we explored further with exploratory factor analysis. Table 2 reports the item-rest correlation for each of the 49 items used in the questionnaire; these range between 0.584 and 0.813, above the generally accepted minimum of 0.40.

| Dimension | No. of items | Mean | Standard deviation | Cronbach’s alpha |
|---|---|---|---|---|
| TK | 22 | 3.72 | 0.696 | 0.967 |
| CK | 6 | 3.94 | 0.679 | 0.923 |
| PK | 6 | 3.93 | 0.688 | 0.952 |
| PCK | 3 | 3.87 | 0.738 | 0.942 |
| TCK | 3 | 3.87 | 0.772 | 0.931 |
| TPK | 5 | 3.98 | 0.708 | 0.932 |
| TPCK | 4 | 3.84 | 0.744 | 0.934 |

Table 1. Descriptive statistics of TPACK subscales.

| Item | Domain (item code) | Item-rest correlation |
|---|---|---|
| **Technological Knowledge** | | |
| I know how to solve technical problems with the computer. | TK1 | 0.584 |
| I easily learn aspects related to new technologies. | TK2 | 0.719 |
| I keep up with new and important technologies. | TK3 | 0.734 |
| I often "tinker" with technology. | TK4 | 0.670 |
| I know many different technologies. | TK5 | 0.744 |
| I possess the technical skills I need to use technology. | TK6 | 0.757 |
| I have had sufficient opportunities to work with different technologies. | TK7 | 0.718 |
| I know basic hardware (e.g., CD-Rom, motherboard, RAM) and their functions. | TK8 | 0.671 |
| I know basic software (e.g., Windows, Media Player) and their functions. | TK9 | 0.742 |
| I follow the advancements of recent computer technologies. | TK10 | 0.739 |
| I use word processing programs (e.g., MS Word). | TK11 | 0.726 |
| I use spreadsheet programs (e.g., MS Excel). | TK12 | 0.627 |
| I communicate via the Internet (e.g., Email, Messenger, Twitter). | TK13 | 0.699 |
| I use image editing programs (e.g., Paint). | TK14 | 0.723 |
| I use presentation programs (e.g., MS Powerpoint). | TK15 | 0.751 |
| I am able to save data on digital media (e.g., CD, DVD, Dropbox, Drive...). | TK16 | 0.737 |
| I use specific software related to certain disciplines. | TK17 | 0.740 |
| I use the printer. | TK18 | 0.676 |
| I use the projector. | TK19 | 0.636 |
| I use the scanner. | TK20 | 0.659 |
| I use the digital camera. | TK21 | 0.681 |
| I use the Interactive Whiteboard (LIM). | TK22 | 0.656 |
| **Content Knowledge** | | |
| I have sufficient knowledge regarding student inclusion. | CK1 | 0.723 |
| I am capable of thinking inclusively. | CK2 | 0.702 |
| I follow recent developments and applications in my preferred discipline. | CK3 | 0.757 |
| I recognize the experts in my teaching discipline. | CK4 | 0.719 |
| I keep up with updates in resources (e.g., books, journals) in my teaching area. | CK5 | 0.706 |
| I attend conferences and activities in my teaching area. | CK6 | 0.623 |
| **Pedagogical Knowledge** | | |
| I know how to assess student performance in a classroom. | PK1 | 0.745 |
| I can adapt my teaching based on what students currently understand or do not understand. | PK2 | 0.763 |
| I can adjust my teaching style to different students. | PK3 | 0.766 |
| I can assess student learning in multiple ways. | PK4 | 0.753 |
| I can use a wide range of teaching methods in the classroom. | PK5 | 0.764 |
| I am familiar with the most common student understandings and misconceptions. | PK6 | 0.708 |
| **Pedagogical Content Knowledge** | | |
| I know how to choose the most effective teaching methods related to my teaching disciplines. | PCK1 | 0.770 |
| I can develop appropriate assessment tools for my teaching disciplines. | PCK2 | 0.777 |
| I can prepare lessons for students with various learning styles. | PCK3 | 0.772 |
| **Technological Content Knowledge** | | |
| I know the technologies that I can use to understand and implement student inclusion. | TCK1 | 0.813 |
| I design lessons that require the use of educational technologies. | TCK2 | 0.801 |
| I develop classroom activities and projects that involve the use of educational technologies. | TCK3 | 0.798 |
| **Technological Pedagogical Knowledge** | | |
| I can choose technologies that support and enhance student learning during a lesson. | TPK1 | 0.803 |
| My teacher training has enabled me to reflect more deeply on how technology can influence the teaching approaches to be used in the classroom. | TPK2 | 0.704 |
| I critically reflect on the use of technology in the classroom. | TPK3 | 0.715 |
| I choose technologies that are most appropriate to my teaching style. | TPK4 | 0.736 |
| I evaluate the appropriateness of a new technology for teaching and learning. | TPK5 | 0.774 |
| **Technological Pedagogical Content Knowledge** | | |
| I adequately integrate learning content, technologies, and teaching approaches. | TPCK1 | 0.799 |
| I select technologies that make the teaching of certain learning content more effective. | TPCK2 | 0.798 |
| I can select technologies to use in my classroom that enhance what I teach, how I teach, and what students learn. | TPCK3 | 0.798 |
| I can be a point of reference to help other teachers coordinate the use of disciplinary content, technologies, and teaching approaches at my school and/or within my territorial area. | TPCK4 | 0.752 |

Table 2. Individual Item-rest correlations for TPACK items.
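For readers unfamiliar with the statistic in Table 2, an item-rest correlation is the correlation between one item and the sum of all the other items in the scale. The sketch below assumes a Pearson correlation and uses invented responses. The function names are hypothetical, and the exact correlation type and scale composition used by the study’s software are not stated in the text.

```python
import math


def pearson(x, y):
    """Pearson correlation between two equally long numeric sequences."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cross / math.sqrt(ss_x * ss_y)


def item_rest_correlations(responses):
    """Correlate each item with the total score of the remaining items."""
    totals = [sum(row) for row in responses]
    correlations = []
    for index in range(len(responses[0])):
        item = [row[index] for row in responses]
        # Remove the item itself so it does not inflate the correlation.
        rest = [total - score for total, score in zip(totals, item)]
        correlations.append(pearson(item, rest))
    return correlations


# Invented 5-point Likert answers from five respondents to three items.
toy_responses = [
    [4, 5, 4],
    [3, 3, 2],
    [5, 5, 5],
    [2, 3, 2],
    [4, 4, 5],
]
print([round(r, 3) for r in item_rest_correlations(toy_responses)])
```

Subtracting the item from the total before correlating is what distinguishes the item-rest from the item-total correlation.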

4.2. Exploratory factor analysis

To explore the construct validity and underlying factor structure of the TPACK instrument and the relationships between the putative domains, an exploratory factor analysis (EFA) was conducted on the data collected from the 1723 participants. We used EFA rather than confirmatory factor analysis (CFA) because, as Shinas et al. (2013) note, evidence from empirical studies suggests that CFA is a ‘less desirable technique’ than EFA for indicating the number of factors or domains. Before conducting the EFA, the Kaiser-Meyer-Olkin (KMO) measure verified the sampling adequacy for the analysis (KMO = 0.984), a value in the range that Kaiser (1974) labelled ‘marvelous’. Bartlett’s test of sphericity also indicated that correlations between items were sufficiently large for EFA (χ²(1176) = 89.844, p < .001).

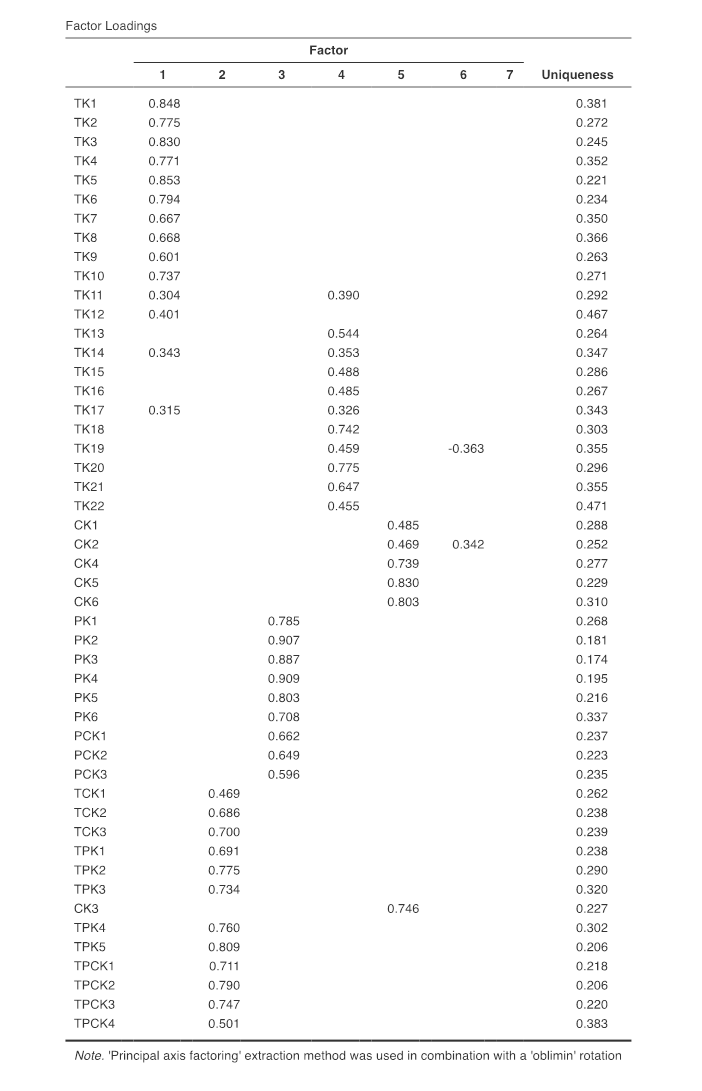

The principal axis factoring extraction method was used in combination with an Oblimin rotation. Principal axis factoring was chosen because it is suggested to give the best results when data are non-normally distributed (Taherdoost, 2014) and is suited to determining the underlying factors related to a set of items (Burton & Mazerolle, 2011, cited in Taherdoost, 2014). Oblimin (an oblique rotation) was chosen as the rotation method because it was hypothesised that the latent factors within TPACK would be interrelated (Field, 2009), and an oblique rotation allows the factors to correlate. As Costello and Osborne assert:

“in the social sciences we generally expect correlation among factors, since behaviour is rarely partitioned into neatly packaged units that function independently of one another” (Costello & Osborne, 2005, p. 3).

The number of factors was determined by parallel analysis (Costello & Osborne, 2005; Field, 2009), an empirically robust method for factor retention, which suggested a seven-factor solution; the scree test confirmed this. Every item had at least one factor loading of 0.3 or above, so no items required removal (Hair et al., 2006) and all 49 items were retained. Salient loadings ranged from 0.304 to 0.909, and the solution accounted for 71.9% of the variance, which meets the generally accepted minimum of 60% cumulative variance in the social sciences (Netemeyer, Bearden, & Sharma, 2003; Hair et al., 2006). However, it is important to note that the sixth and seventh factors had few or no loadings above 0.3, suggesting that they may lack practical significance. The full factor loadings output can be seen in Figure 2.
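The way a 0.3 loading cut-off separates substantive factors from weak ones can be sketched as follows. The loading matrix is invented for illustration and is not the study’s output. The function names and the `min_items=3` rule for flagging a weakly defined factor are assumptions made for this example.

```python
def salient_items_by_factor(loadings, threshold=0.3):
    """Map each factor number to the items loading at or above the threshold."""
    n_factors = len(next(iter(loadings.values())))
    by_factor = {factor: [] for factor in range(1, n_factors + 1)}
    for item, row in loadings.items():
        for factor, value in enumerate(row, start=1):
            # Use the absolute value so negative loadings also count.
            if abs(value) >= threshold:
                by_factor[factor].append(item)
    return by_factor


def cross_loading_items(loadings, threshold=0.3):
    """Items with salient loadings on more than one factor."""
    return [
        item
        for item, row in loadings.items()
        if sum(abs(value) >= threshold for value in row) > 1
    ]


def weak_factors(by_factor, min_items=3):
    """Factors defined by fewer than min_items salient items (assumed rule)."""
    return [factor for factor, items in by_factor.items() if len(items) < min_items]


# Hypothetical pattern matrix: six items, three extracted factors.
toy_loadings = {
    "A1": [0.85, 0.05, 0.02],
    "A2": [0.70, 0.31, 0.01],
    "A3": [0.62, 0.10, -0.08],
    "B1": [0.10, 0.74, 0.00],
    "B2": [0.04, 0.65, 0.12],
    "B3": [0.02, 0.58, 0.34],
}
grouped = salient_items_by_factor(toy_loadings)
print(grouped)
print(cross_loading_items(toy_loadings))
print(weak_factors(grouped))
```

In this toy example the third factor is retained by the extraction but is defined by a single low loading, which mirrors the kind of pattern that leads to a factor being treated as not practically significant.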

For testing the model fit, the chi-squared model test was not used due to the large sample size (N = 1,723), as it is known to be sensitive to larger samples (Bergh, 2015). Instead, we used alternative fit indices. The Tucker-Lewis Index (TLI) was 0.916, indicating good model fit (Finch, 2020), and the Root Mean Square Error of Approximation (RMSEA) was 0.061 (with a 90% confidence interval of 0.059 to 0.062), which also reveals acceptable model fit.
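As a rough guide to what these indices measure, the sketch below assumes the commonly used formulas RMSEA = √(max(χ² − df, 0) / (df · (N − 1))) and TLI = ((χ²₀/df₀) − (χ²/df)) / ((χ²₀/df₀) − 1), where the subscript 0 denotes the null (independence) model. Some software uses N rather than N − 1 in the RMSEA denominator. All input values are invented; the model chi-square values behind the reported indices are not given in the text.

```python
import math


def rmsea(chi2, df, n_obs):
    """Root Mean Square Error of Approximation (N - 1 variant)."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n_obs - 1)))


def tli(chi2_model, df_model, chi2_null, df_null):
    """Tucker-Lewis Index comparing the fitted model with the null model."""
    null_ratio = chi2_null / df_null
    model_ratio = chi2_model / df_model
    return (null_ratio - model_ratio) / (null_ratio - 1.0)


# Hypothetical fit values, chosen only to show the arithmetic.
print(round(rmsea(chi2=200.0, df=100, n_obs=101), 3))
print(round(tli(chi2_model=200.0, df_model=100, chi2_null=1100.0, df_null=110), 3))
```

Both indices rescale the chi-square by the degrees of freedom, and RMSEA additionally by the sample size, which is why they are less dominated by a large N than the raw chi-square test.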

Figure 2: Factor loading full output.

5. Discussion

Although the exploratory factor analysis with parallel analysis initially suggested seven factors, consistent with the putative TPACK model, a closer examination of the factor loadings indicates that only five significant factors emerged in the context of Italian in-training teachers. These five factors are:

1. Two distinct factors for Technological Knowledge (TK).

2. A combined factor integrating Technological Content Knowledge (TCK), Technological Pedagogical Knowledge (TPK), and Technological Pedagogical Content Knowledge (TPCK) into a single, larger Technological Pedagogical and Content Knowledge subscale.

3. A combined factor that merges Pedagogical Knowledge (PK) and Pedagogical Content Knowledge (PCK) into one, larger Pedagogical Content Knowledge domain.

4. A distinct Content Knowledge (CK) domain, consistent with the original model domain.

This refined understanding suggests a more integrated and contextually relevant application of the TPACK framework for Italian in-training teachers. A commentary on each factor follows:

Factor 1 consists solely of 14 items relating to Technological Knowledge (TK). Although this is fewer than the 22 items in the putative TK domain, it indicates that this factor strongly reflects the technological aspects of the TPACK model. Factor 4 consists of the 8 remaining items from the Technological Knowledge domain, which demonstrates a splitting of the putative TK domain. Upon closer inspection, the TK items in Factor 1 relate more to “I know” statements, whereas the TK items in Factor 4 relate more to “I use” statements, suggesting a disconnect between what the in-training teachers feel they know in theory and what they use in practice. For instance, Factor 1 (the “I know” statements) is strongly characterised by TK5 (loading = 0.853), “I know many different technologies”, whereas Factor 4 (the “I use” statements) is strongly characterised by TK18 (loading = 0.742), “I use the printer”, TK20 (loading = 0.775), “I use the scanner”, and TK21 (loading = 0.647), “I use the digital camera”. This could simply be because participants rarely need to use these technologies, or it could reflect a lack of confidence in their practical use, indicating potential areas of focus for training. In addition, some TK items from Factor 1 also load onto Factor 4, which suggests a potential overlap or ambiguity in how technological knowledge is represented in the dataset. The relatively low loadings (ranging from 0.304 to 0.353) of the items that overlap on Factor 1 and Factor 4 suggest a need for further clarity or refinement in how these items are categorized.

Factor 2 combines items from Technological Content Knowledge (TCK), Technological Pedagogical Knowledge (TPK), and Technological Pedagogical Content Knowledge (TPCK) into a single factor. This amalgamation suggests a strong interconnection among these items based on their shared content, and indicates that the three putative domains may not be as distinct as the original TPACK subscales suggest. The factor is strongly characterised by TPK5 (loading = 0.809), “I evaluate the appropriateness of a new technology for teaching and learning”, and TPCK2 (loading = 0.790), “I select technologies that make the teaching of certain learning content more effective”, both of which pertain to the ability to choose appropriate technologies to make teaching more effective. This suggests that Factor 2 relates to decision-making about technologies.

Factor 3 consists of all items from Pedagogical Knowledge (PK) and all items from Pedagogical Content Knowledge (PCK), again suggesting that these putative domains may not be clearly distinct and are tied together by pedagogical knowledge as their underlying connecting factor. This is in line with the findings of two of the previously mentioned studies, Koh et al. (2010) and Luik et al. (2018). The factor is most strongly characterised by high-loading items such as PK2 (loading = 0.907), PK4 (loading = 0.909) and PK5 (loading = 0.803), which all pertain to how respondents perceive their adaptability in teaching and assessment methods.

Factor 5 consists of all items from the Content Knowledge domain, suggesting this as a clearly defined factor, providing evidence for the construct validity of this putative domain within TPACK, distinct from other factors.

Factor 6 is characterized by only two items. One is a Technological Knowledge item with a negative loading; the other has a low loading (0.342) and also loads on the Content Knowledge factor. The minimal representation and low loadings in Factor 6 call into question its significance within the model, supporting our interpretation of only five significant factors. Furthermore, Factor 7 is notable for having no salient loadings at all: none of the survey items contributes substantially to the seventh factor, which again supports our five reported domains.

Our condensing of the number of domains echoes findings from previous research (Luik et al., 2018; Bostancioğlu and Handley, 2018; Rauf et al., 2018; Lavidas et al., 2021; Koh et al., 2010). As mentioned previously, the construct validity of TPACK depends on context and setting, so the generalizability of these results is inherently limited. However, for Italian and pre-service teacher training contexts, our results, which draw on a large sample, offer valuable insights by identifying areas for consideration or potential revision as well as validation. Future research could employ confirmatory factor analysis (CFA) to validate these findings.

6. Conclusions and future recommendations

The exploratory factor analysis (EFA) conducted in this study offers insights into the construct validity of the Technological Pedagogical Content Knowledge (TPACK) framework in a large sample of Italian in-training teachers. Although the EFA output initially suggested seven factors, as per the TPACK model, our interpretation refined these into five significant factors, a condensing of domains that has also occurred in previous explorations of TPACK construct validity. Whilst acknowledging the context-specific nature of the findings, we contribute to future uses of the TPACK framework in Italian teacher training. The division of the Technological Knowledge domain, in particular, highlighted nuances in how in-training teachers perceive and use technological knowledge, offering potential guidelines and areas for curriculum refinement or targeted training. The results also echo previous works that highlight the need for ongoing refinement of the TPACK framework so that it remains relevant and up to date within the ever-evolving area of education and technology.

References

Antonietti, C., Cattaneo, A., & Amenduni, F. (2022). Can teachers’ digital competence influence technology acceptance in vocational education? Computers in Human Behavior, 132, 107266. https://doi.org/10.1016/j.chb.2022.107266

Bergh, D. (2015). Chi-Squared Test of Fit and Sample Size-A Comparison between a Random Sample Approach and a Chi-Square Value Adjustment Method. Journal of Applied Measurement, 16(2), 204–217.

Bostancioğlu, A., & Handley, Z. L. (2018). Developing and validating a questionnaire for evaluating the EFL ‘Total PACKage’: Technological Pedagogical Content Knowledge (TPACK) for English as a Foreign Language (EFL). Computer Assisted Language Learning, 572–598.

Burton, L. J., & Mazerolle, S. M. (2011). Survey Instrument Validity Part I: Principles of Survey Instrument Development and Validation in Athletic Training Education Research. Athletic Training Education Journal, 6(1), 27–35. https://doi.org/10.4085/1947-380X-6.1.27

Chai, C., Koh, J., & Tsai, C.-C. (2011). Exploring the factor structure of the constructs of technological, pedagogical, content knowledge (TPACK). Asia-Pacific Education Researcher, 20, 595–603.

Comrey, A. L., & Lee, H. B. (1992). A First Course in Factor Analysis. Hillsdale, NJ: Lawrence Erlbaum Associates.

Costello, A. B., & Osborne, J. (2005). Best practices in exploratory factor analysis: Four recommendations for getting the most from your analysis. Practical Assessment, Research, and Evaluation, 10(1), Article 1. https://doi.org/10.7275/jyj1-4868

Field, A. (2009). Discovering Statistics Using SPSS: Introducing Statistical Method (3rd ed.). Thousand Oaks, CA: Sage Publications.

Finch, W. H. (2020). Using Fit Statistic Differences to Determine the Optimal Number of Factors to Retain in an Exploratory Factor Analysis. Educational and Psychological Measurement, 80(2), 217–241. https://doi.org/10.1177/0013164419865769

Hair, J. F., Anderson, R. E., Tatham, R. L., & Black, W. C. (1995). Multivariate data analysis. Englewood Cliffs, NJ: Prentice-Hall.

Hair, J. F., Black, W. C., Babin, B. J., Anderson, R. E., & Tatham, R. L. (2006). Multivariate Data Analysis (6th ed.). Upper Saddle River, NJ: Pearson Prentice Hall.

Kaiser, H. F. (1974). An index of factorial simplicity. Psychometrika, 39(1), 31–36.

Keating, T., & Evans, E. (2001). Three computers in the back of the classroom: Pre-service teachers’ conceptions of technology integration. In R. Carlsen, N. Davis, J. Price, R. Weber & D. Willis (eds.), Society for Information Technology and Teacher Education Annual (pp. 1671-1676). Norfolk, VA: Association for the Advancement of Computing in Education.

Koehler, M. J., & Mishra, P. (2005). What happens when teachers design educational technology? The development of technological pedagogical content knowledge. Journal of Educational Computing Research, 32(2), 131–152.

Koh, J. H. L., Chai, C. S., & Tsai, C.-C. (2010). Examining the technological pedagogical content knowledge of Singapore pre-service teachers with a large-scale survey. Journal of Computer Assisted Learning, 26(6), 563–573. https://doi.org/10.1111/j.1365-2729.2010.00372.x

La Marca, A., Gülbay, E., & Di Martino, V. (2018). Learning strategies, decision-making styles and conscious use of technologies in initial teacher education. Form@re - Open Journal per la formazione in rete, 18(1), 150–164.

Lavidas, K., Katsidima, M.-A., Theodoratou, S., Komis, V., & Nikolopoulou, K. (2021). Preschool teachers’ perceptions about TPACK in Greek educational context. Journal of Computers in Education, 8(3), 395–410. https://doi.org/10.1007/s40692-021-00184-x

Li, M., Vale, C., Tan, H., & Blannin, J. (2024). A systematic review of TPACK research in primary mathematics education. Mathematics Education Research Journal, 1–31.

Lin, T.-C., Tsai, C.-C., Chai, C. S., & Lee, M.-H. (2013). Identifying Science Teachers’ Perceptions of Technological Pedagogical and Content Knowledge (TPACK). Journal of Science Education and Technology, 22(3), 325–336. https://doi.org/10.1007/s10956-012-9396-6

Luik, P., Taimalu, M., & Suviste, R. (2018). Perceptions of technological, pedagogical and content knowledge (TPACK) among pre-service teachers in Estonia. Education and Information Technologies, 23(2), 741–755. https://doi.org/10.1007/s10639-017-9633-y

Magnanini, A., Morelli, G., & Sanchez Utge, M. (2023). Validation of the TPACK-IT scale for pre-service teacher trainees. Italian Journal of Health Education, Sport and Inclusive Didactics, 7(1). https://doi.org/10.32043/gsd.v7i1.794

Mishra, P., & Koehler, M. J. (2006). Technological Pedagogical Content Knowledge: A framework for integrating technology in teacher knowledge. Teachers College Record, 108(6), 1017–1054.

Netemeyer, R., Bearden, W., & Sharma, S. (2003). Scaling Procedures: Issues and Applications. Thousand Oaks, CA: Sage. https://doi.org/10.4135/9781412985772

Önal, N. (2016). Development, Validity and Reliability of TPACK Scale with Pre-Service Mathematics Teachers. International Online Journal of Educational Sciences, 8. https://doi.org/10.15345/iojes.2016.02.009

Pamuk, S., Ergun, M., Cakir, R., Yilmaz, H. B., & Ayas, C. (2015). Exploring relationships among TPACK components and development of the TPACK instrument. Education and Information Technologies, 20(2), 241–263. https://doi.org/10.1007/s10639-013-9278-4

Pérez, A. G. (2014). Characterization of Inclusive Practices in Schools with Education Technology. Procedia - Social and Behavioral Sciences, 132, 357–363. https://doi.org/10.1016/j.sbspro.2014.04.322

Prasojo, L., Habibi, A., Mukminin, A., & Mohd Yaakob, M. F. (2020). Domains of Technological Pedagogical and Content Knowledge: Factor Analysis of Indonesian In-Service EFL Teachers. International Journal of Instruction, 13, 1–16. https://doi.org/10.29333/iji.2020.13437a

Rauf, A., Swanto, S., & Salam, S. (2021). Exploratory Factor Analysis of TPACK in the Context of ESL Secondary School Teachers in Sabah. International Journal of Education, Psychology and Counseling, 6, 137–146. https://doi.org/10.35631/IJEPC.6380012

Schmidt, D. A., Baran, E., Thompson, A. D., Mishra, P., Koehler, M. J., & Shin, T. S. (2009). Technological Pedagogical Content Knowledge (TPACK): The Development and Validation of an Assessment Instrument for Preservice Teachers. Journal of Research on Technology in Education, 42(2).

Shinas, V. H., Yilmaz-Ozden, S., Mouza, C., Karchmer-Klein, R., & Glutting, J. J. (2013). Examining Domains of Technological Pedagogical Content Knowledge Using Factor Analysis. Journal of Research on Technology in Education, 45(4), 339–360. https://doi.org/10.1080/15391523.2013.10782609

Shulman, L. S. (1986). Those who understand: Knowledge growth in teaching. Educational Researcher, 15(2), 4–14.

Streiner, D. L. (2003). Starting at the Beginning: An Introduction to Coefficient Alpha and Internal Consistency. Journal of Personality Assessment, 80(1), 99–103. https://doi.org/10.1207/S15327752JPA8001_18

Tabachnick, B. G., & Fidell, L. S. (2001). Using multivariate statistics (4th ed.). Boston, MA: Allyn and Bacon.

Taherdoost, H., Sahibuddin, S., & Jalaliyoon, N. (2014). Exploratory Factor Analysis; Concepts and Theory. In J. Balicki (Ed.), Advances in Applied and Pure Mathematics (Vol. 27, pp. 375–382). WSEAS. https://hal.science/hal-02557344

Tavakol, M., & Dennick, R. (2011). Making sense of Cronbach’s alpha. International Journal of Medical Education, 2, 53–55. https://doi.org/10.5116/ijme.4dfb.8dfd

Tpack.org. (2012). Using the TPACK Image. Tpack.Org. https://tpack.org/tpack-image/

Zeynivandnezhad, F., Rashed, F., & Kanooni, A. (2019). Exploratory Factor Analysis for TPACK among Mathematics Teachers: Why, What and How. Anatolian Journal of Education, 4(1), 59–76.