M. Elena Rodríguez

Faculty of Computer Science, Multimedia and Telecommunications; Universitat Oberta de Catalunya, Spain – mrodriguezgo@uoc.edu

https://orcid.org/0000-0002-8698-4615

Juliana E. Raffaghelli

FISPPA - Faculty of Philosophy, Sociology, Education and Applied Psychology; University of Padua, Italy – juliana.raffaghelli@unipd.it

https://orcid.org/0000-0002-8753-6478

David Bañeres

Faculty of Computer Science, Multimedia and Telecommunications; Universitat Oberta de Catalunya, Spain – dbaneres@uoc.edu

https://orcid.org/0000-0002-0380-1319

Ana Elena Guerrero-Roldán

Faculty of Computer Science, Multimedia and Telecommunications; Universitat Oberta de Catalunya, Spain – aguerreror@uoc.edu

https://orcid.org/0000-0001-7073-7233

Francesca Crudele

FISPPA - Faculty of Philosophy, Sociology, Education and Applied Psychology; University of Padua, Italy – francesca.crudele@phd.unipd.it

https://orcid.org/0000-0003-1598-2791

ABSTRACT

AI-powered educational tools (EdAI) include early warning systems (EWS) that identify at-risk undergraduates and offer personalized assistance. Revealing students’ subjective experiences with EWS could contribute to a deeper understanding of what it means to engage with AI in areas of human life such as teaching and learning. Our investigation therefore explored students’ subjective experiences with an EWS, characterizing them according to students’ profiles, self-efficacy, prior experience, and perspective on data ethics. The results show that the students, largely senior workers with strong academic self-efficacy, had limited experience with this kind of system and minimal expectations. However, using the EWS inspired meaningful reflections. A comparison between the Computer Science and Economics disciplines further showed stronger trust in, and expectations of, the system and AI among Computer Science students. The study emphasizes the importance of helping students gain further experience with, and understanding of, AI systems as they are adopted in education, to ensure the quality, relevance, and fairness of their overall educational experience.


KEYWORDS

Students’ Experiences, Artificial Intelligence, Early Warning System, Higher Education, Thematic Analysis


CONFLICTS OF INTEREST

The Authors declare no conflicts of interest.

RECEIVED

January 11, 2024

ACCEPTED

April 23, 2024

1. Introduction

Artificial Intelligence (AI) is increasingly transforming various industries and services (Makridakis, 2017), including education. AI is a tool that allegedly has the potential to address some of the biggest challenges in education today, promoting innovative teaching and learning practices and accelerating progress (Popenici & Kerr, 2017; Zawacki-Richter et al., 2019). Although AI systems have been studied in education over the last 30 years, the general public’s attention has been captured particularly by what has been called “Generative AI” (GenAI). Since November 2022, emerging technologies such as ChatGPT, created by the research company OpenAI (OpenAI, 2022), and Bard, developed by Google (Pichai, 2023), have been launched and, within a year, followed by thousands of applications. Both are built on “large language models”, i.e., models trained to predict the probability that various words appear together in a given text. These AI-based natural language processing tools enable human-like conversations with chatbots, providing an exciting user experience (Jalalov, 2023; Lund & Wang, 2023).
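To make the idea of predicting “the probability that various words appear together” concrete, the sketch below estimates next-word probabilities with a toy bigram model in Python. It is a deliberately simplified, hypothetical illustration: the corpus and function name are invented for this example, and the large language models behind ChatGPT or Bard use neural networks over subword tokens and long contexts rather than raw word-pair counts.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Estimate P(next word | previous word) from raw word-pair counts."""
    pair_counts = defaultdict(Counter)
    for sentence in corpus:
        tokens = sentence.lower().split()
        # Count every adjacent (previous, next) word pair in the sentence.
        for prev, nxt in zip(tokens, tokens[1:]):
            pair_counts[prev][nxt] += 1
    # Normalise each row of counts into a probability distribution.
    return {
        prev: {word: n / sum(counts.values()) for word, n in counts.items()}
        for prev, counts in pair_counts.items()
    }


# Hypothetical toy corpus, for illustration only.
corpus = [
    "the student passed the course",
    "the student failed the exam",
    "the teacher graded the exam",
]
model = train_bigram(corpus)
print(model["student"])  # {'passed': 0.5, 'failed': 0.5}
```

Here “the” is followed by “student” in two of its six occurrences, so the model assigns P(student | the) = 1/3; a language model generalises this idea to far richer contexts.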

A recent study by Tlili et al. (2023) focusing on ChatGPT highlighted the need for an AI-based teaching philosophy in Higher Education (HE), emphasizing the importance of enhancing AI literacy skills as part of training for 21st-century capabilities (Ng et al., 2023). In such a context, it is important for students to understand that several AI-driven tools may contribute to shaping their user experience in different ways.

Indeed, several Educational AI (EdAI) tools that predate GenAI, particularly those developed locally by small and medium-sized enterprises (SMEs), might enhance students’ experience and learning outcomes both by automating routine administrative tasks and by supporting data-based judgments (Pedró et al., 2019). From the students’ perspective, EdAI could support their independent learning, self-efficacy, self-regulation, and awareness of their progress (Jivet et al., 2020). Nonetheless, a lack of understanding of how AI-based tools work, and of the digital infrastructures that support them, might create unrealistic expectations or leave students unaware of unauthorised data capture and the subsequent manipulation of those data. Indeed, a key approach to AI literacy rests on transparency and on users’ agency to decide at which point a system should be stopped because, while operating, it is not serving educational and, more broadly, human purposes (Floridi, 2023).

In connection with this perspective, several works (Pedró et al., 2019; Rienties et al., 2018; Scherer & Teo, 2019; Valle et al., 2021) point out that evaluating users’ perceptions and opinions about EdAI tools is crucial. A study by Raffaghelli, Rodríguez, et al. (2022) investigated factors connected to students’ acceptance (a form of opinion) of an AI-driven system based on a predictive model capable of early detection of students at risk of failing or dropping out, i.e., an EWS (Bañeres et al., 2020; Bañeres et al., 2021; Raffaghelli, Rodríguez, et al., 2022). The study also investigated how acceptance changed over time (Greenland & Moore, 2022). Following a pre-usage and post-usage experimental design based on the Unified Theory of Acceptance and Use of Technology (UTAUT) model (Venkatesh et al., 2003), the authors found that the factors influencing EWS acceptance included perceived usefulness, expected effort, and facilitating conditions, whereas social influence was the least relevant factor.

Interestingly, our earlier findings also revealed a disconfirmation effect (Bhattacherjee & Premkumar, 2004) in the acceptance of the AI-driven system, i.e., a difference between expectations about using such a technology and the post-usage experience. Despite the high satisfaction levels reported after using the system, Raffaghelli, Rodríguez, et al. (2022) suggested that, even though AI is a trendy topic in education, expectations need to be analysed carefully in authentic settings. However, because of its quantitative nature, that study could not fully capture students’ expectations, beliefs, motivations, and experiences with a specific AI-driven system.
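Disconfirmation can be illustrated with a simple difference score between pre-usage expectations and post-usage evaluations. The Python sketch below is a simplified operationalization assumed for illustration only: the function names and the 1–5 Likert scores are hypothetical, and it does not reproduce the measurement model used by Bhattacherjee and Premkumar (2004) or by Raffaghelli, Rodríguez, et al. (2022).

```python
def disconfirmation(expectation: float, experience: float) -> float:
    """Difference score: >0 means experience exceeded expectations, <0 fell short."""
    return experience - expectation


def label(score: float) -> str:
    """Name the direction of a difference score."""
    if score > 0:
        return "positive disconfirmation"
    if score < 0:
        return "negative disconfirmation"
    return "confirmation"


# Hypothetical 1-5 Likert means for three students (not data from the study).
pre_usage = {"s1": 2.0, "s2": 3.5, "s3": 4.0}
post_usage = {"s1": 4.0, "s2": 3.5, "s3": 3.0}

for student, expected in pre_usage.items():
    score = disconfirmation(expected, post_usage[student])
    print(student, score, label(score))
```

In this toy case, a student who expected little and then rated the experience highly (s1) shows positive disconfirmation, the pattern that low expectations followed by high satisfaction would suggest.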

The current study investigates students’ acceptance of an AI-driven system (an EWS) through thematic discourse and content analysis. Such systems can warn students and teachers about at-risk situations through dashboards or panel visualisations based on local data generated by the learning management system (LMS) adopted by the university (Liz-Domínguez et al., 2019). Most importantly, an EWS may also provide intervention mechanisms, helping teachers offer early personalised guidance and follow up with students to address possible issues. We are therefore interested in how students’ experience of the EWS, combined with their knowledge, impacts their acceptance of such developments in HE. The effectiveness of an EWS lies not only in its technical performance but also in students’ and teachers’ experiences, opinions, and perceptions of its usefulness and relevance for their learning and teaching practices. This aligns with the debate on AI literacy: understanding how the EWS operates and the technological infrastructure behind it might lead students to act agentically in relation to an AI-driven technology.

2. Background

The cross-disciplinary research field on EdAI tools encompasses multiple perspectives: social scientists focus on human aspects, potential benefits, and ethical concerns (Prinsloo, 2019; Selwyn, 2019; Tzimas & Demetriadis, 2021), whereas STEM researchers focus on technical aspects such as usability and user experience (UX) issues (Bodily & Verbert, 2017; Hu et al., 2014; Rienties et al., 2018). Qualitative studies on stakeholders’ experiences are scarce and recent, and a better understanding is needed (Ghotbi et al., 2022; Kim et al., 2019; Zawacki-Richter et al., 2019). Below, we review the literature on general experiences relating to AI in education and then on experiences with EWS as a particular case, focusing primarily on the student’s perspective.

Much of the literature agrees that perceived usefulness and ease of use are positively related to the intention to use EdAI tools, both among teachers (Chocarro et al., 2021; Rienties et al., 2018) and among students (Chen et al., 2021; Chikobava & Romeike, 2021; Kim et al., 2020). Gado et al. (2022) found that perceived knowledge of AI, especially among female students, is crucial for effective AI training approaches in various disciplines. Many students considered AI a diffuse technology, suggesting the need to design AI training approaches within psychology curricula. This need has also been emphasised in other disciplines, such as medicine (Bisdas et al., 2021), business (Xu & Babaian, 2021), and even computer engineering (Bogina et al., 2022). Also in connection with knowledge about AI, Bochniarz et al. (2022) concluded that students’ conceptualization of AI can significantly affect their attitudes towards it and their distrust of it, which may be disproportionately shaped by science fiction, entertainment, and mass media (Kerr et al., 2020). Students and teachers have also reported ethical concerns about data privacy and control, as well as about how AI algorithms work (Freitas & Salgado, 2020; Bisdas et al., 2021; Holmes et al., 2022).

Exposure to EdAI tools such as conversational agents can change students’ perceptions, with adaptiveness being essential for the intention to use them (Guggemos et al., 2020). Hands-on experience with EdAI tools can also shift perceptions. For example, van Brummelen et al. (2021) reported an intervention in which middle and high school students built their own conversational agents; afterwards, the students’ perceptions had changed towards higher acceptance.

Recent qualitative studies have explored middle school students’ conceptualization of AI (Demir & Güraksın, 2022) and HE students’ attitudes and moral perceptions towards AI (Ghotbi et al., 2022), finding both positive and negative aspects. Similarly, Qin et al. (2020) investigated the factors influencing trust in EdAI systems (mainly conversational agents) in middle and high schools from the perspectives of students, teachers, and parents. The authors classified trust risk factors as technology-related, context-related, and individual-related. Students and parents expected EdAI systems to have the potential to eliminate discrimination and injustice in education, which aligns with other studies. Seo et al. (2021) examined the impact of AI systems on student-teacher interaction in online HE and found that, while AI could enhance communication, it also raised concerns about responsibility, agency, and surveillance. They also found that negative perceptions often arise from aspects of AI otherwise seen as positive, primarily because of unrealistic expectations and misunderstandings.

In the specific case of EWS, machine learning is applied to recognise patterns, make predictions, and apply the discovered knowledge to detect students at risk of failing or dropping out by means of embedded predictive models (Casey & Azcona, 2017; Kabathova & Drlik, 2021; Xing et al., 2016). Predictions are delivered to the students through a traffic light signal and personalised automatic messages. When such models are integrated within an EWS, students’ retention and performance usually improve, and students tend to express positive opinions (Arnold & Pistilli, 2012; Krumm et al., 2014; Ortigosa et al., 2019). However, teachers have reported that students become excessively dependent on the EWS instead of developing autonomous learning skills, as well as a lack of teacher-oriented best practices for using it (Krumm et al., 2014; Plak et al., 2022). Qualitative studies based on semi-structured interviews conducted in small focus groups have examined the dashboards that an EWS provides to flag students at risk of failing. These studies suggest that students would prefer to see their progress in percentages accompanied by motivating comments, as the rating scale was too simple (Akhtar, 2017). In addition, students flagged as performing poorly could become demotivated and lose confidence, although they agreed on the importance of knowing their performance status (Hu et al., 2014). Notably, most papers focus on academic staff’s opinions (Gutiérrez et al., 2020; Krumm et al., 2014; Plak et al., 2022), so more research is needed to capture students’ perceptions and better understand the impact of EWS on their behavior, achievement, and skills (Bodily & Verbert, 2017).
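The delivery step described above, in which a prediction becomes a traffic light plus an automatic message, can be sketched as a simple thresholding function. This is a hypothetical Python illustration: the cut-off values, message texts, and function names are assumptions rather than the thresholds or messages of any EWS cited here, the predictive model itself is left out (its output is taken as a probability of failing), and messages are personalised only by risk level.

```python
from enum import Enum


class Light(Enum):
    GREEN = "low risk"
    YELLOW = "moderate risk"
    RED = "high risk"


# Hypothetical cut-offs on the predicted probability of failing;
# a real EWS would calibrate these on its own historical data.
YELLOW_FROM = 0.3
RED_FROM = 0.6

# Hypothetical message templates for the automatic intervention e-mails.
MESSAGES = {
    Light.GREEN: "You are on track. Keep up the good work.",
    Light.YELLOW: "Some activities need attention. Check the next deadlines.",
    Light.RED: "You may be at risk of failing. Contact your teacher for support.",
}


def traffic_light(p_fail: float) -> Light:
    """Map a model's predicted probability of failing to a traffic light."""
    if not 0.0 <= p_fail <= 1.0:
        raise ValueError("p_fail must be a probability in [0, 1]")
    if p_fail >= RED_FROM:
        return Light.RED
    if p_fail >= YELLOW_FROM:
        return Light.YELLOW
    return Light.GREEN


def feedback(p_fail: float) -> tuple[Light, str]:
    """Return the light and the message a student would receive."""
    light = traffic_light(p_fail)
    return light, MESSAGES[light]


print(feedback(0.45))
```

Keeping the thresholds separate from the model makes it possible to tune how many students are flagged without retraining, which matters given the demotivation risk for flagged students noted above.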

Tellingly, research on EdAI tools has primarily focused on developing them, conducting short experimental applications, and running surveys unrelated to real classroom settings (Ferguson et al., 2016). These studies often rely on minimal exposure to the technology (Bochniarz et al., 2022; Gado et al., 2022; Seo et al., 2021) and focus on development and testing (Hu et al., 2014; Rienties et al., 2018) rather than on the broader context of daily teaching and learning (Bodily & Verbert, 2017). In this context, critics argue that speculative and conceptual propositions for future EdAI generate polarisation, leading to excessive enthusiasm or pessimism (Buckingham-Shum, 2019). Speculative studies can contribute little to addressing data privacy concerns and negative opinions about AI systems in society and education (Prinsloo et al., 2022). Contributions that explore the subjective experience and understanding of EdAI tools can help promote a more balanced vision of human-computer interaction in education.

3. Methodological approach

Based on the background analysis, we formulated two main research questions:

● RQ1: How do students experience an AI tool such as an EWS, given their profiles, their self-efficacy when studying, their prior experience of AI tools overall, and their opinions on data ethics?

● RQ2: Are there differences among students depending on their field of study? In particular, if a student’s field is closer to computer science, and thus to the development of AI tools, will the student be more confident in and willing to adopt the tool?

Our study bases its methodology on the phenomenological understanding of human experience as applied to human-computer interaction. We refer to the Encyclopedia of Human-Computer Interaction, which highlights that “our primary stance towards the world is more like a pragmatic engagement with it than like a detached observation of it […] we are [...] inclined to grab things and use them” (Gallagher, 2014, Ch. 28). Therefore, experience means acting and giving meaning within the context of life, things, and the technology surrounding us. In this regard, EdAI tools do not simply pre-exist; they need to be experienced, used, and understood, because “intentional, temporal and lived experience [...] is directly relevant to design issues”. Consequently, the development of EdAI tools requires a deep understanding of the human experience of using them.

3.1. Context of the study

Blinded university is a blinded nationality fully online university with

relevant national and international trajectory. All educational activity occurs

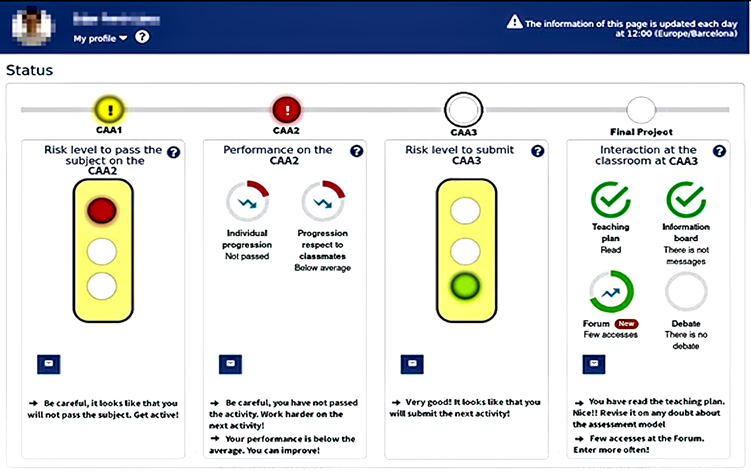

within its virtual campus, which is based on a proprietary platform. Figure 1 displays the dashboards

to which students and teachers have access to follow the progress of what is

called Continuous Assessment of Activities (CAAs). To learn more about CAAs and

the outputs of our EWS see “Supplementary Materials”.

Figure 1. The student dashboard (status information). Note: AI-enhanced by the Editor to compensate for the inability to take high-resolution screenshots on the visualizing device (status of image quality not attributable to the Authors).

3.2. Data collection method and participants

Blinded, the EWS under study, was tested during an initial survey carried out in the 2020–21 academic year across three different degrees (Computer Science or CS; Business Administration or BA; and Marketing and Market Research or MR). Of the 918 respondents, 65 students were available to be interviewed. The interviews were held at the end of the course, when only 51 students from the initial group were still available because of dropout. Hence, we selected 25 students representing the overall characteristics of the CS, BA, and MR courses: 13 from CS, 10 from BA, and two from MR. Twenty-four of these students replied, but three did not attend the interview, so 21 students were finally interviewed. They were self-selected and engaged voluntarily in the activity according to the requirements defined by the blinded Ethics Committee. The interviews were conducted online using the Blackboard Collaborate videoconferencing system and recorded with the students’ consent.

Table 1 reports the participants’ main characteristics: degree (CS, BA, or MR), age, expertise in the field of study, gender, and working status. All participants had full-time jobs and were pursuing new studies through fully online courses to improve their career prospects. Despite their seniority as workers, some declared low expertise in the discipline, which they were approaching for the first time. As for gender, twelve participants declared they were male and nine declared they were female. Moreover, almost all CS students were male (only one female), whereas most BA and MR students were female (8 out of 11).

| Student & Degree | Age | Expertise | Gender | Worker |
|---|---|---|---|---|
| BA1 | 30–39 | High | Male | Yes |
| BA2 | 30–39 | N.A. | Male | Yes |
| BA3 | 40–49 | High | Male | Yes |
| BA4 | 40–49 | Low | Female | Yes |
| BA5 | Less than 25 | Low | Female | Yes |
| BA6 | 30–39 | High | Female | Yes |
| BA7 | 40–49 | Low | Female | Yes |
| BA8 | 30–39 | Low | Female | Yes |
| BA9 | 30–39 | Low | Female | Yes |
| MR1 | 40–49 | Low | Female | Yes |
| MR2 | Less than 25 | Low | Female | Yes |
| CS1 | 40–49 | High | Male | Yes |
| CS2 | Less than 25 | Low | Male | Yes |
| CS3 | 40–49 | High | Female | Yes |
| CS4 | 40–49 | High | Male | Yes |
| CS5 | 50–60 | High | Male | Yes |
| CS6 | 30–39 | Low | Male | Yes |
| CS7 | 50–59 | High | Male | Yes |
| CS8 | 25–29 | High | Male | Yes |
| CS9 | 30–39 | Low | Male | Yes |
| CS10 | 30–39 | Low | Male | Yes |

Table 1. Participants’ characteristics.

Acronyms adopted to characterise the degree: BA (Business Administration), MR (Marketing and Market Research), CS (Computer Science).
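The gender breakdown reported in the text can be checked directly against Table 1. The Python sketch below transcribes the ID and gender columns and re-tallies them, pooling BA and MR as “BA-MR” as Section 4.2 does; the helper names are illustrative.

```python
from collections import Counter

# (student ID, gender) transcribed from Table 1.
PARTICIPANTS = [
    ("BA1", "Male"), ("BA2", "Male"), ("BA3", "Male"), ("BA4", "Female"),
    ("BA5", "Female"), ("BA6", "Female"), ("BA7", "Female"), ("BA8", "Female"),
    ("BA9", "Female"), ("MR1", "Female"), ("MR2", "Female"),
    ("CS1", "Male"), ("CS2", "Male"), ("CS3", "Female"), ("CS4", "Male"),
    ("CS5", "Male"), ("CS6", "Male"), ("CS7", "Male"), ("CS8", "Male"),
    ("CS9", "Male"), ("CS10", "Male"),
]


def field(student_id: str) -> str:
    """Pool BA and MR into one group, keeping CS separate."""
    return "CS" if student_id.startswith("CS") else "BA-MR"


by_gender = Counter(gender for _, gender in PARTICIPANTS)
by_field_gender = Counter((field(sid), gender) for sid, gender in PARTICIPANTS)

print(by_gender)
print(by_field_gender)
```

The tallies agree with the text: 12 male and 9 female participants, one female among the 10 CS students, and 8 females among the 11 BA-MR students.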

3.3. Data analysis method

The interview transcripts, duly revised by each researcher, were analysed using NVivo software. Thematic Analysis (TA) was then applied with a mixed deductive and inductive approach (called “codebook TA” by Braun et al., 2019). A set of codes was derived from the interview guide, which consisted of the following seven questions:

● Q1–Q4, covering age, field of study, professional experience, motivation to study online, and academic self-efficacy;

● Q5, about the student’s opinion of AI systems overall and the specific issue of captured data, as context for blinded;

● Q6, asking about the students’ expectations, interaction, and post-experience appraisal of blinded;

● Q7, inviting students’ proposals for improving blinded.

Data were coded from the original verbatim transcriptions in Spanish, yielding a corpus of 21,761 words. Afterward, the data were read and segmented; all relevant interview excerpts addressing aspects of the interview scheme were marked and selected for the analysis. Each segment, whether a single word, a phrase, or a longer excerpt, captured comments, descriptions, or opinions related to one of the interview questions and was assigned to a subtheme. New subthemes were derived from the initial themes, and, upon the researchers’ agreement, logically complementary codes were added to specific codes; e.g., “High Expectations” was complemented with “Low Expectations”. This operation led to 17 themes with 67 subthemes, derived from the seven initial themes, totalling 634 marked segments. If a segment referred to two subthemes in a way that could not be separated, the excerpt was coded into both. This article is based on 11 themes and 52 subthemes, comprising 396 coded excerpts over 12,046 words. The excluded themes dealt with issues outside the scope of this work, such as liking or disliking online education and the motivations to pursue an online degree. The interview guide, the entire code tree, a table with exemplar excerpts translated into English, and the overall themes report in Spanish, extracted from NVivo and documenting the interrater agreement exercise, have been published as Open Data (Raffaghelli, Loria-Soriano, et al., 2022).

The Spanish data were analysed using a code tree in English, which was discussed and enriched after two interviews had been coded as training. Two more codes were added after four further interviews had been coded. Five of the logically created codes (see the procedure above) were not used. To ensure the reliability of the analysis, the researchers translated and collected a representative sample of coded segments covering each code and subcode (10%, i.e., n = 63 out of 634). The coded excerpts were examined in a consensus meeting, which reached a good level of agreement on the themes (56/63, 89% agreement).
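The reliability figures above follow from simple arithmetic: a 10% sample of 634 segments gives 63 segments, and 56 agreed segments out of 63 give about 88.9%, reported as 89%. A minimal Python sketch, with illustrative constant and function names:

```python
TOTAL_SEGMENTS = 634   # coded segments in the full corpus
SAMPLE_SHARE = 0.10    # share of segments re-examined for reliability


def percent_agreement(agreed: int, total: int) -> float:
    """Simple (not chance-corrected) percentage agreement."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100 * agreed / total


sample_size = round(SAMPLE_SHARE * TOTAL_SEGMENTS)  # 63.4 rounds to 63
agreement = percent_agreement(56, sample_size)
print(sample_size, f"{agreement:.1f}%")  # 63 88.9%
```

Percentage agreement does not correct for agreement expected by chance; a chance-corrected index such as Cohen’s kappa would additionally require the code assigned by each coder to every sampled segment, which is not reported in this section.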

After consolidating the code tree with the themes that emerged, a content analysis was carried out. Content analysis (Elo et al., 2014) is a research method aimed at identifying, through quantitative means, the presence of certain words, themes, or concepts within given qualitative data (i.e., text). After coding a text and detecting its themes, researchers can quantify and analyse the themes’ presence, meanings, and relationships. In our approach, we used NVivo’s tools to quantify and aggregate themes and subthemes, reported in the columns of Tables 7–11 of the Supplementary Materials, by:

● analysing the presence of coded themes and subthemes across the interviews (n.int);

● comparing the coverage of themes and subthemes across interviews (% cov.);

● analysing the frequency and percentage of codes per theme (Fr.codes, % codes);

● analysing the number and percentage of words per theme and subtheme (n.words, % words);

● using coloured rows to show the maximum theme representation across interviews (MTAI); the intercode frequency (IF), i.e., the codes for a theme, including all its subthemes, relative to the overall corpus; and each subtheme’s comparative coverage within a theme (% codes) as well as the coverage of words per subtheme (% words).
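The measures listed above can be sketched on a small in-memory representation of coded segments. In this hypothetical Python sketch, we assume that % cov. is the share of the 21 interviews in which a theme appears (e.g., 13 of 21 interviews give 61.90%, the coverage reported for data capture and usage in Section 4.1) and that % codes and % words are computed relative to the parent theme. The segment data, class, and function names are illustrative; they are not NVivo’s export format or API.

```python
from dataclasses import dataclass

TOTAL_INTERVIEWS = 21


@dataclass(frozen=True)
class Segment:
    interview: str               # participant ID, e.g. "BA3"
    theme: str
    subthemes: tuple[str, ...]   # a non-separable excerpt may be coded into two
    words: int                   # length of the excerpt in words


def coverage(n_interviews: int, total: int = TOTAL_INTERVIEWS) -> float:
    """% cov.: share of all interviews in which a theme appears (assumed definition)."""
    return 100 * n_interviews / total


def n_int(segments, theme):
    """n.int: distinct interviews with at least one segment of the theme."""
    return len({s.interview for s in segments if s.theme == theme})


def fr_codes(segments, theme, subtheme=None):
    """Fr.codes: coded references for a theme, or for one of its subthemes."""
    return sum(
        len(s.subthemes) if subtheme is None else s.subthemes.count(subtheme)
        for s in segments
        if s.theme == theme
    )


def pct_codes(segments, theme, subtheme):
    """% codes: a subtheme's share of all codes within its theme."""
    return 100 * fr_codes(segments, theme, subtheme) / fr_codes(segments, theme)


def n_words(segments, theme, subtheme=None):
    """n.words: words in segments of the theme (optionally of one subtheme)."""
    return sum(
        s.words
        for s in segments
        if s.theme == theme and (subtheme is None or subtheme in s.subthemes)
    )


def pct_words(segments, theme, subtheme):
    """% words: a subtheme's share of the theme's words (double-coded text counts for each subtheme)."""
    return 100 * n_words(segments, theme, subtheme) / n_words(segments, theme)


# Hypothetical segments, for illustration only.
segments = [
    Segment("BA3", "Data capture", ("Open-Proactive",), 60),
    Segment("BA6", "Data capture", ("Open-Cautious",), 30),
    Segment("CS1", "Data capture", ("Open-Proactive", "Open-Cautious"), 10),
]
theme = "Data capture"
print(n_int(segments, theme), round(coverage(n_int(segments, theme)), 2))
print(pct_codes(segments, theme, "Open-Proactive"), pct_words(segments, theme, "Open-Proactive"))
```

Because a double-coded excerpt counts once per subtheme, subtheme % codes still sum to 100% within a theme, whereas subtheme % words can exceed 100% in total.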

Overall, the frequencies and comparisons of coded themes and subthemes across interviews showed how relevant a topic was for several participants. Meanwhile, the frequencies and comparisons of codes and words were used to show how densely a topic was represented across participants, treating the extracted corpus as a discourse sample.

4. Results

4.1. RQ1: Thematic and content analysis

At first sight, some themes received significant attention: the participants were particularly talkative when referring to the characteristics of blinded. As observed in Table 7 (see Supplementary Materials), the themes relating to the tool’s characteristics and UX (e.g., features such as the traffic lights, the relevance for future students, and the students’ interest in and understanding of the tool) were covered on average in more than 52% of interviews. The most represented subthemes, based on the number of coded segments, were: comments on the emails sent by the intervention mechanism (42.03%, 16 interviews); understanding of the tool (51.11%, 11 interviews); the experience of the green light (72.00%), which was the most frequent, across 17 interviews; high interest (87.80%, 17 interviews); and potential relevance for future students (81.58%, 14 interviews). In the students’ words: “I think the information [provided by blinded] is perfect. That is, what is measured and what we as students can see is perfect, at least from my point of view” [BA3]; “as a fairly good overview and fairly easy to understand, especially the traffic light” [BA6]. Concerning the green light, all students were active and mostly received a green, low-risk level throughout the course: “I think they were green in general” [CS6].

Much less frequently, comments pointed to low relevance as part of the UX (13.16%, five interviews) or expressed low interest (4.88%, two interviews). In these cases, the comments expressed a certain discontent with the tool’s automated support: “Of course, I already know that I have met the deadlines, that I have submitted the assignments, as well as what grades I received. Let’s say that the prediction is pretty obvious” [CS10]. These comments came from good learners who never saw an at-risk light (i.e., red or yellow): “I wouldn’t be able to tell you how exactly it has helped me. The only thing I am currently doing is checking from time to time if the light is green. That’s all” [CS4].

Beyond the attention given to making proposals, we also found that the proposals were fairly diverse (see Table 8 of the Supplementary Materials), with comments on the panel display (36.36%, ten interviews); on a tool able to provide deeper insights in the intervention messages (31.82%, 12 interviews); and, to a lesser extent, on the overall design (10.61%, four interviews), the way the prediction was generated (13.64%, five interviews), and the possibility of accessing a tutorial (7.58%, four interviews). For example, the students indicated that they would add specific features, like “Maybe a graph? To see progress. Like ‘you have started here, you have been gradually progressing and you are improving […]’” [BA9]. They also commented on deeper educational aspects: “I would like to receive the professor’s comment about my work, other than automatic comments because you can tell they are coming from the machine” [CS1].

Data capture and usage in education also received considerable attention, with a coverage of 61.90% (see Table 9 of the Supplementary Materials). For example, a student said: “It is very important. I think that data nowadays is one of the most important things, reading data to be able to evaluate and to be able to make decisions and to be able to improve everything for the student, and this type of tool can help us indirectly to be able to pass the subject, which is our aim” [BA3], reflecting a proactive approach to sharing data that can be opened (see subtheme “Open-Proactive”, 60.00%, 13 interviews). There were also more cautious voices: “Well then. Well, in the end, it’s moving forward. Yes, I don’t see it as a bad thing, as long as they are used in an appropriate way, without unlawful uses […]” [BA6] (see subtheme “Open-Cautious”, 30.00%, eight interviews). Very few students agreed with the idea of opening restricted-access data, and only with precautionary measures: “You explain perfectly, they are using your data without you having given explicit and clearly informed permission, unlike blinded” [BA9]. No student commented on the idea of capturing restricted data for any purpose.

As observed in Table 10 of the Supplementary Materials, a theme that did not often appear in the students’ responses was their expectations about blinded (28.57%). Looking at the specific expressions, the subtheme “Low Expectations” predominated in the six interviews in which the theme appeared. This is interesting when considered together with the relatively good opinions expressed about the UX, a combination that could be deemed consistent with the disconfirmation effect (Bhattacherjee & Premkumar, 2004), although further intracase analysis of this relationship would be necessary. It is worth noting that the students had little experience with AI systems. Within the AI experience subtheme, automated educational tools were mentioned in seven interviews (40.00% of codes), image processing in three (20.00%), recommender systems in seven (32.00%), and chatbots as tutors in only two (8.00%).

Finally, we observed that the participants, all experienced workers and primarily middle-aged, felt generally confident about their study methods (see Table 11 of the Supplementary Materials). They expressed high or very high confidence (15 interviews) in 52.38% of the overall discourse. Expressions of lower confidence were also present, though to a lesser extent: intermediate confidence, neither low nor high (22.86%, three interviews), and low confidence (25.71%, five interviews). Confidence is an interesting personal trait to consider when trying to understand how students approach and accept AI tools. When cross-tabulating the data, we expected expressions of lower confidence to co-occur with less openness to new and unfamiliar technological tools.

4.2. RQ2: Cross-Tabulation

To grasp the nuances of the students’ experience with the system, we analysed the discourse according to the students’ fields of study, cross-tabulating the results for the relevant themes that emerged.

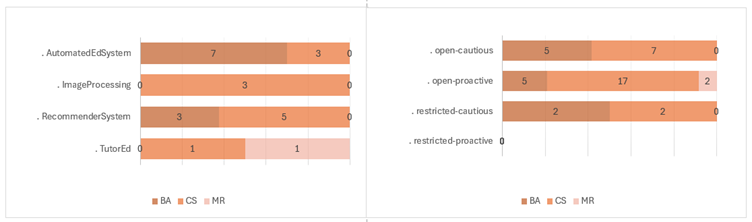

Figure 2. AI experience and Data capture and usage by field.

Overall, we observed that the CS students had a more diversified

experience of EdAI systems relating to their peers

from BA-MR (Figure 2, left).

Also, they were more positive concerning data capture and usage (Figure 2, right), and they

generally had higher self-efficacy in academic tasks (Figure 3, left). The categories overlapping must be noticed,

with 9 out of 10 CS students being males and BA-MR prevalently females (8 out

of 11 cases). We cross-tabulated gender and self-efficacy to study this

phenomenon further, and we confirmed that males across disciplines expressed a

higher self-efficacy (“High” with 3 BA and 8 CS, and “Very-High” with 2 CS)

than their female peers (“High” with 3 BA, and “Very-High” with 2 BA). In their

words: “I like the subject (but) I have

had to get a private teacher to help me […] because it is impossible, not even

with a thousand tutorials could make it and it is getting very hard for me”

[MR1]. “I try when I have a holiday at work, I spend the whole morning, all the

time I have free in front of the computer, looking at notes and applying them,

doing exercises, trying to apply everything […] to see if I can assimilate (the

course’s content)” [BA9]. Still, male CS students were the only group to express low self-efficacy (4/9 codes for gender and 35 coded segments on self-efficacy), suggesting that the perceived difficulty of the subject studied may also affect self-efficacy.

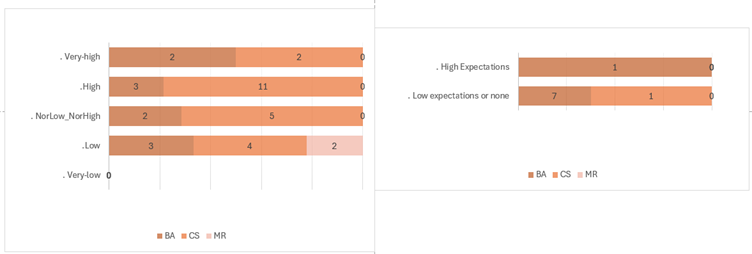

Figure 3. Self-efficacy and Expectations by field.

Concerning expectations, regardless of their background, students initially had no clear vision of [blinded] and did not consider it relevant (Figure 3, right).

However, after the experience, we observed that those taking part in most of

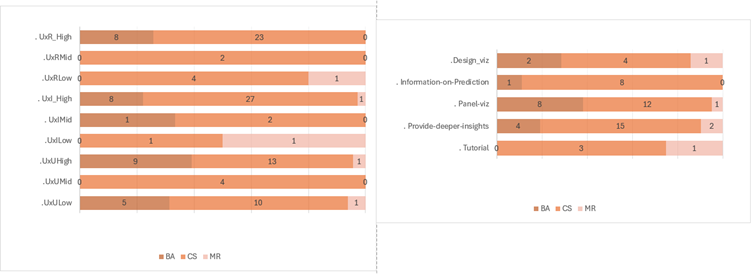

the interaction (Figure 4, left)

were the CS students who showed a higher interest (UXI prefix, 24/36 codes) and

saw more relevance (UXR prefix, 18/31 codes) in their experience of the tool.

Nevertheless, within their own group, the BA students reported a positive UX, with high relevance (13/13 codes in that category) and high interest (11/12 codes).

Understanding of the tool was more mixed in all fields. BA students expressed high understanding in ten codes but also low understanding in six (16 in total). The situation was similar for the CS students, with 12/21 codes for high understanding and 9/21 for low understanding. Overall, the students referred more often to a high or middle understanding (27 of 43 coded segments, 62.79%) than to a low understanding (16/43, 37.21%). For example, a CS student showed high understanding by describing the tool’s functions and their impact: “And they also tell me the points where I’m doing better […] Well, the

tool showed that I did the minimum required. So I

think OK, today I can improve. It’s like direct feedback, without having to

constantly bother the teacher” [CS2]. However, both CS and BA-MR students also referred to low understanding: “If it is a totally new application, then it does give you some idea of at least the positioning to know what I’m going to find” [CS10]; “What I didn’t quite understand, is the bottom part of the tool that I had, like the note that appeared like it was always on the first CAA, and I didn’t understand how to change it, or if it could be changed or if it was a graphic in general” [BA8].

Figure 4. Relevance, interest, understanding and Proposals by field.

Finally, as Figure 4 (right) shows, the students were highly proactive in making proposals and suggestions to improve the tool (covered in 12 interviews). However, the crosstabs revealed a prevalence of suggestions from CS students (42 of 62 coded segments, 67.74%) and from male students (38/62, 61.29%). Their suggestions fell into several categories: integrating a tutorial (3/4), providing deeper insights (15/21), changing the panel visualisation (12/21), enriching the sources of prediction (8/9), and improving the overall [blinded] design (4/7). Interestingly, both CS and BA-MR students made suggestions relating to the tool’s appearance and its impact on the learning process: “I would add one thing [...] not

only the tool should tell you if you’ve not logged in to the forum, but it

should also look at the interactions that you have on the forum, because I, for

example, log in a lot, I read everything, but I interact very little. So maybe

interacting can be useful to promote better learning” [CS10]. “I would add an orientation like when you are

like ‘I don’t know which way to go or how you would recommend it’, to see how I

have evolved or how different students have done, how they have done one thing

or another” [BA7]. By contrast, only the CS students raised concerns about how the tool worked and how the captured data could be used more effectively: “Talking about trying to incorporate as many

parameters as possible to give a prediction, that could be maybe a bit more

concrete and more useful. Because the comments I got were keep working, so you’re

going to do well in the subject […] but I need more” [CS8]. In this regard, males’ and females’ suggestions tended to coincide, both concentrating on the panel visualisation (11 female-coded segments compared with ten from males) and on the provision of deeper insights (8 and 13, respectively). Consistent with the pattern by subject field, only males referred to the predictive system (9 of 62 coded segments).
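The percentages reported in this section are simple shares of coded segments (part divided by total, times 100). As an illustrative check only, and not a description of the authors’ analysis procedure, the Python sketch below recomputes the shares reported above from their numerators and denominators, and sums the gender-coded suggestion counts per category; both sums agree with the category totals of 21 given for the panel visualisation and deeper-insight suggestions. The helper names `share` and `category_totals` are hypothetical.

```python
def share(part: int, total: int) -> float:
    """Return `part` as a percentage of `total`, rounded to two decimals."""
    if total <= 0:
        raise ValueError("total must be positive")
    return round(100 * part / total, 2)


def category_totals(table: dict[str, dict[str, int]]) -> dict[str, int]:
    """Sum each row of a category-by-gender cross-tabulation."""
    return {category: sum(counts.values()) for category, counts in table.items()}


# Gender-coded suggestion segments per category, as reported in the text.
suggestions_by_gender = {
    "panel visualisation": {"female": 11, "male": 10},
    "deeper insights": {"female": 8, "male": 13},
}

# (numerator, denominator) pairs behind the percentages reported in the text.
reported_shares = {
    "high or middle understanding": (27, 43),
    "low understanding": (16, 43),
    "suggestions from CS students": (42, 62),
    "suggestions from male students": (38, 62),
}

if __name__ == "__main__":
    for label, (part, total) in reported_shares.items():
        print(f"{label}: {part}/{total} = {share(part, total)}%")
    print(category_totals(suggestions_by_gender))
```

Keeping the counts in a nested dictionary mirrors the layout of a crosstab (rows are categories, columns are genders), so row totals fall out of a single comprehension.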

5. Discussion and conclusion

EdAI systems such as EWS are becoming more common in HE, but they are usually studied in development settings rather than by analysing students’ reactions (Bodily & Verbert, 2017; Ferguson et al., 2016). Our investigation analysed students’ perspectives on EdAI tools integrated into the learning-teaching process in undergraduate courses at a fully online university. Through 21 semi-structured interviews, the researchers explored students’ experiences with the tool, their expectations, and their suggestions for improvement. The results revealed that the students, mostly senior workers with good academic self-efficacy, had little experience with EWS or other artificial intelligence systems in education and held low expectations. Using the EWS triggered interest and prompted participants to reflect on its features, its design, and the use of their data.

Regarding RQ1, several relevant insights emerged. First, the analysis corroborates the findings of Raffaghelli, Rodríguez, et al. (2022) about the disconfirmation effect (Bhattacherjee & Premkumar, 2004), revealing an inverse relationship between students’ expectations about technology and their acceptance behavior. This is relevant when considering the positive UX opinions that students expressed despite their low initial expectations (as in Hu et al., 2014). Thus, as stated in Akhtar et al. (2017) and van Brummelen et al. (2021), using EdAI tools can change students’ perceptions and opinions.

Regarding the collected data, the students’ perspectives on data privacy reflected the growing societal awareness of the issue (Prinsloo et al., 2022). Notably, a majority of students expressed little concern about the use of their data, particularly if it contributed to their well-being or to technological progress. This finding differs from Bisdas et al. (2021), whose students reported ethical concerns about privacy and algorithmic control over data, suggesting that the participants’ medical training background may have influenced their views. Our results align with existing literature, such as Bochniarz et al. (2022) and Kerr et al. (2020), which highlights the connection between knowledge of and attitudes towards EdAI, along with the associated feelings of trust or distrust (Ghotbi et al., 2022).

However, as in Akhtar et al. (2017) and Guggemos et al. (2020), we also showed how exposure to an EdAI tool could engage students in making concrete proposals to shape the relationship between humans and technological-educational agents.

Students’ suggestions on the panel display and intervention messages revealed misunderstandings about the panel’s usefulness, because all students received a low-risk level (i.e., green traffic lights). A similar limitation affected the intervention messages, since students only received appraisal messages. Other EWS studies reported positive effects of messages (Arnold & Pistilli, 2012; Bañeres et al., 2021; Raffaghelli, Rodríguez et al., 2022) on at-risk students who received recommendations and guidelines (Seo et al., 2021), but such effects could not be observed in this work, where no participant was at risk. For this reason, students requested training and tutorials to better understand the EdAI tool (Kim et al., 2020).
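The misunderstanding described above follows from how a traffic-light display summarises predictions: if every predicted risk falls in the lowest band, every student sees green and receives only appraisal messages. The Python sketch below illustrates this mapping under loudly stated assumptions: the three-level scale, the amber level, the cut-offs `AMBER_THRESHOLD` and `RED_THRESHOLD`, the message policy, and the function names are all hypothetical and do not describe the actual EWS used in the study.

```python
from enum import Enum


class RiskLevel(Enum):
    """Traffic-light risk levels (hypothetical three-level scale)."""
    GREEN = "low risk"
    AMBER = "medium risk"  # hypothetical intermediate level
    RED = "high risk"


# Hypothetical cut-offs on a predicted probability of failing the course.
AMBER_THRESHOLD = 0.4
RED_THRESHOLD = 0.7


def risk_level(p_fail: float) -> RiskLevel:
    """Map a predicted failure probability to a traffic-light level."""
    if not 0.0 <= p_fail <= 1.0:
        raise ValueError("p_fail must be a probability in [0, 1]")
    if p_fail >= RED_THRESHOLD:
        return RiskLevel.RED
    if p_fail >= AMBER_THRESHOLD:
        return RiskLevel.AMBER
    return RiskLevel.GREEN


def message_type(level: RiskLevel) -> str:
    """Assumed policy: low-risk students get appraisal, others get recommendations."""
    if level is RiskLevel.GREEN:
        return "appraisal"
    return "recommendations and guidelines"


if __name__ == "__main__":
    # A hypothetical cohort in which every prediction is low risk.
    cohort = [0.05, 0.12, 0.30]
    levels = [risk_level(p) for p in cohort]
    print([level.name for level in levels])
    print({message_type(level) for level in levels})
```

With a cohort like this one, the panel never changes colour and every message is an appraisal, so students never see how the tool behaves at other risk levels, which is consistent with their request for tutorials.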

Concerning RQ2, the study analysed gender differences in the acceptance of EdAI tools across disciplines (with more males in CS and more females in BA-MR). CS students showed higher confidence in, and expectations of, the system, while BA-MR students were less confident about using it. A low perception of usefulness and ease of use may affect usage (Chen et al., 2021; Rienties et al., 2018). However, EdAI acceptance is also based on perceived knowledge of the tool (Gado et al., 2022), which might apply to the female participants. Therefore, students’ perceptions of the instrument seem deeply rooted in their understanding and needs as students and future professionals. CS students’ deeper understanding of the system seems to lead to more engagement and curiosity. Nonetheless, BA-MR students converged with their CS peers in discussing features that support academic activities.

The lower academic self-efficacy displayed by the female students in our study might also stem from lower self-efficacy with technologies (González-Pérez et al., 2020; Sáinz & Eccles, 2012; Zander et al., 2020). On the other hand, female students with higher self-efficacy were more engaged with, and more critical of, the tools supporting their studies.

BA-MR students held a “reactive and cautious” opinion about data capture, while CS students focused more on the benefits of data sharing. This difference may be due to the students’ different knowledge of internal EWS operations and their scepticism about the potential benefits.

This study has significant limitations. The sample comprised only 21 self-selected white students from the Global North, whose postdigital positioning may have been influenced by positive experiences in technological settings. Moreover, differences across disciplines and gender require further consideration. The participants, who performed well and preferred innovative learning tools, received low-risk predictions, so the study could not provide insights into at-risk students (Akhtar et al., 2017). The gender composition of enrolment in each field also influenced the findings, with some findings more related to discipline than to gender.

Overall, these results emphasize the importance of supporting students’ understanding and experiences of EdAI systems so that they can participate in the development of these tools, aiming for quality, relevance, and fairness. Teachers’ perspectives are also crucial, as seen in Krumm et al. (2014) and Plak et al. (2022). Moreover, as Buckingham-Shum (2019) highlights, the debate on data usage should be opened to both students and teachers so that they feel empowered when using EdAI tools. Prinsloo et al. (2022) propose a complex approach to data privacy, focusing on alternative understandings of personal data privacy and their implications for technological solutions. Indeed, understanding both central and alternative approaches to data privacy is crucial for an ethical approach to EdAI tools (and, in our specific case, to the EWS). This idea builds on “postdigital positionings,” which refer to the unique way individuals relate to the emerging technological landscape (Hayes, 2021, p. 49). Therefore, empirical studies should consider students’ lived experiences, teachers’ experiences, and intersubjective perspectives on technology (Pedró et al., 2019; Rienties et al., 2018). This spectrum requires further exploration to understand the impacts of EdAI, its social and educational implications, and the balance between its design, development, and human impact.

References

Akhtar, S., Warburton, S., & Xu, W. (2017). The use of an online learning and teaching system for monitoring computer-aided design student participation and predicting student success. International Journal of Technology and Design Education, 27(2). https://doi.org/10.1007/s10798-015-9346-8

Arnold, K. E., & Pistilli, M. D. (2012). Course signals at Purdue: Using learning analytics to increase student success. ACM International Conference Proceeding Series, 267–270. https://doi.org/10.1145/2330601.2330666

Bañeres, D., Karadeniz, A., Guerrero-Roldán, A.-E., & Rodríguez, M. E. (2021). A Predictive System for Supporting At-Risk Students’ Identification. In K. Arai, S. Kapoor, & R. Bhatia (Eds.), Proceedings of the Future Technologies Conference (FTC) 2020, Volume 1 (Vol. 1288, pp. 891–904). Springer International Publishing. https://doi.org/10.1007/978-3-030-63128-4_67

Bañeres, D., Rodríguez, M. E., Guerrero-Roldán, A. E., & Karadeniz, A. (2020). An Early Warning System to Detect At-Risk Students in Online Higher Education. Applied Sciences, 10(13), 4427. https://doi.org/10.3390/app10134427

Bhattacherjee, A., & Premkumar, G. (2004). Understanding changes in belief and attitude toward information technology usage: A theoretical model and longitudinal test. MIS Quarterly: Management Information Systems, 28(2), 229–254. https://doi.org/10.2307/25148634

Bisdas, S., Topriceanu, C. C., Zakrzewska, Z., Irimia, A. V., Shakallis, L., Subhash, J., Casapu, M. M., Leon-Rojas, J., Pinto dos Santos, D., Andrews, D. M., Zeicu, C., Bouhuwaish, A. M., Lestari, A. N., Abu-Ismail, L., Sadiq, A. S., Khamees, A., Mohammed, K. M. G., Williams, E., Omran, A. I., … Ebrahim, E. H. (2021). Artificial Intelligence in Medicine: A Multinational Multi-Center Survey on the Medical and Dental Students’ Perception. Frontiers in Public Health, 9. https://doi.org/10.3389/fpubh.2021.795284

Bochniarz, K. T., Czerwiński, S. K., Sawicki, A., & Atroszko, P. A. (2022). Attitudes to AI among high school students: Understanding distrust towards humans will not help us understand distrust towards AI. Personality and Individual Differences, 185. https://doi.org/10.1016/j.paid.2021.111299

Bodily, R., & Verbert, K. (2017). Review of research on student-facing learning analytics dashboards and educational recommender systems. IEEE Transactions on Learning Technologies, 10(4). https://doi.org/10.1109/TLT.2017.2740172

Bogina, V., Hartman, A., Kuflik, T., & Shulner-Tal, A. (2022). Educating Software and AI Stakeholders About Algorithmic Fairness, Accountability, Transparency and Ethics. International Journal of Artificial Intelligence in Education, 32(3). https://doi.org/10.1007/s40593-021-00248-0

Bozkurt, A., Xiao, F., Lambert, S., Pazurek, A., Crompton, H., Koseoglu, S., Farrow, R., Bond, M., Nerantzi, C., Honeychurch, S., Bali, M., Dron, J., Mir, K., Stewart, B., Stewart, B., Costello, E., Mason, J., Stracke, C., Romero-Hall, E., & Jandric, P. (2023). Speculative Futures on ChatGPT and Generative Artificial Intelligence (AI): A Collective Reflection from the Educational Landscape. Asian Journal of Distance Education, 18, 53–130 [Journal Article, Zenodo]. https://doi.org/10.5281/zenodo.7636568

Braun, V., Clarke, V., Hayfield, N., & Terry, G. (2019). Thematic analysis. Handbook of Research Methods in Health Social Sciences, 843–860. https://doi.org/10.1007/978-981-10-5251-4_103

Buckingham-Shum, S. J. (2019). Critical data studies, abstraction and learning analytics: Editorial to Selwyn’s LAK keynote and invited commentaries. Journal of Learning Analytics, 6(3). https://doi.org/10.18608/jla.2019.63.2

Casey, K., & Azcona, D. (2017). Utilizing student activity patterns to predict performance. International Journal of Educational Technology in Higher Education, 14(1). https://doi.org/10.1186/s41239-017-0044-3

Chen, M., Siu-Yung, M., Chai, C. S., Zheng, C., & Park, M. Y. (2021). A Pilot Study of Students’ Behavioral Intention to Use AI for Language Learning in Higher Education. Proceedings - 2021 International Symposium on Educational Technology, ISET 2021. https://doi.org/10.1109/ISET52350.2021.00045

Chikobava, M., & Romeike, R. (2021). Towards an Operationalization of AI acceptance among Pre-service Teachers. ACM International Conference Proceeding Series. https://doi.org/10.1145/3481312.3481349

Chocarro, R., Cortiñas, M., & Marcos-Matás, G. (2021). Teachers’ attitudes towards chatbots in education: a technology acceptance model approach considering the effect of social language, bot proactiveness, and users’ characteristics. Educational Studies. https://doi.org/10.1080/03055698.2020.1850426

Demir, K., & Güraksın, G. E. (2022). Determining middle school students’ perceptions of the concept of artificial intelligence: A metaphor analysis. Participatory Educational Research, 9(2). https://doi.org/10.17275/per.22.41.9.2

Dreyfus, H. L. (2007). Why Heideggerian AI failed and how fixing it would require making it more Heideggerian. Artificial Intelligence, 171(18). https://doi.org/10.1016/j.artint.2007.10.012

Elo, S., Kääriäinen, M., Kanste, O., Pölkki, T., Utriainen, K., & Kyngäs, H. (2014). Qualitative Content Analysis: A Focus on Trustworthiness. SAGE Open, 4(1). https://doi.org/10.1177/2158244014522633

Ferguson, R., Brasher, A., Clow, D., Cooper, A., Hillaire, G., Mittelmeier, J., Rienties, B., Ullmann, T., & Vuorikari, R. (2016). Research Evidence on the Use of Learning Analytics: implications for education Policy. https://doi.org/10.2791/955210

Floridi, L. (2023). The Ethics of Artificial Intelligence. Principles, Challenges, and Opportunities. Oxford University Press. https://global.oup.com/academic/product/the-ethics-of-artificial-intelligence-9780198883098?cc=it&lang=en

Freitas, R., & Salgado, L. (2020). Educators in the loop: Using scenario simulation as a tool to understand and investigate predictive models of student dropout risk in distance learning. Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), 12217 LNCS. https://doi.org/10.1007/978-3-030-50334-5_17

Gado, S., Kempen, R., Lingelbach, K., & Bipp, T. (2022). Artificial intelligence in psychology: How can we enable psychology students to accept and use artificial intelligence? Psychology Learning and Teaching, 21(1). https://doi.org/10.1177/14757257211037149

Gallagher, S. (2014). Phenomenology | The Encyclopedia of Human-Computer Interaction, 2nd Ed. https://www.interaction-design.org/literature/book/the-encyclopedia-of-human-computer-interaction-2nd-ed/phenomenology

Ghotbi, N., Ho, M. T., & Mantello, P. (2022). Attitude of college students towards ethical issues of artificial intelligence in an international university in Japan. AI and Society, 37(1). https://doi.org/10.1007/s00146-021-01168-2

González-Pérez, S., Mateos de Cabo, R., & Sáinz, M. (2020). Girls in STEM: Is It a Female Role-Model Thing? Frontiers in Psychology, 11. https://doi.org/10.3389/fpsyg.2020.02204

Greenland, S. J., & Moore, C. (2022). Large qualitative sample and thematic analysis to redefine student dropout and retention strategy in open online education. British Journal of Educational Technology, 53(3). https://doi.org/10.1111/bjet.13173

Guggemos, J., Seufert, S., & Sonderegger, S. (2020). Humanoid robots in higher education: Evaluating the acceptance of Pepper in the context of an academic writing course using the UTAUT. British Journal of Educational Technology, 51(5), 1864–1883. https://doi.org/10.1111/bjet.13006

Gutiérrez, F., Seipp, K., Ochoa, X., Chiluiza, K., de Laet, T., & Verbert, K. (2020). LADA: A learning analytics dashboard for academic advising. Computers in Human Behavior, 107. https://doi.org/10.1016/j.chb.2018.12.004

Hayes, S. (2021). Postdigital Positionality. Developing Powerful Inclusive Narratives for Learning, Teaching, Research and Policy in Higher Education. Brill. https://brill.com/view/title/57466?language=en

Holmes, W., Porayska-Pomsta, K., Holstein, K., Sutherland, E., Baker, T., Shum, S. B., Santos, O. C., Rodrigo, M. T., Cukurova, M., Bittencourt, I. I., & Koedinger, K. R. (2022). Ethics of AI in Education: Towards a Community-Wide Framework. International Journal of Artificial Intelligence in Education, 32(3). https://doi.org/10.1007/s40593-021-00239-1

Hu, Y. H., Lo, C. L., & Shih, S. P. (2014). Developing early warning systems to predict students’ online learning performance. Computers in Human Behavior, 36, 469–478. https://doi.org/10.1016/j.chb.2014.04.002

Jalalov, D. (2023, March 9th). L’evoluzione dei chatbot da T9-Era e GPT-1 a ChatGPT. (The evolution of chatbots from T9-Era and GPT-1 to ChatGPT.) [Blog]. Metaverse Post. https://mpost.io/it/l%27evoluzione-dei-chatbot-dall%27era-t9-e-gpt-1-a-chatgpt/

Jivet, I., Scheffel, M., Drachsler, H., & Specht, M. (2017). Awareness is not enough: Pitfalls of learning analytics dashboards in the educational practice. Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), 10474 LNCS. https://doi.org/10.1007/978-3-319-66610-5_7

Jivet, I., Scheffel, M., Schmitz, M., Robbers, S., Specht, M., & Drachsler, H. (2020). From students with love: An empirical study on learner goals, self-regulated learning and sense-making of learning analytics in higher education. Internet and Higher Education, 47. https://doi.org/10.1016/j.iheduc.2020.100758

Kabathova, J., & Drlik, M. (2021). Towards predicting student’s dropout in university courses using different machine learning techniques. Applied Sciences (Switzerland), 11(7). https://doi.org/10.3390/app11073130

Kerr, A., Barry, M., & Kelleher, J. D. (2020). Expectations of artificial intelligence and the performativity of ethics: Implications for communication governance. Big Data and Society, 7(1). https://doi.org/10.1177/2053951720915939

Kim, J., Merrill, K., Xu, K., & Sellnow, D. D. (2020). My Teacher Is a Machine: Understanding Students’ Perceptions of AI Teaching Assistants in Online Education. International Journal of Human-Computer Interaction, 36(20). https://doi.org/10.1080/10447318.2020.1801227

Kim, J. W., Jo, H. I., & Lee, B. G. (2019). The Study on the Factors Influencing on the Behavioral Intention of Chatbot Service for the Financial Sector: Focusing on the UTAUT Model. Journal of Digital Contents Society, 20(1), 41–50. https://doi.org/10.9728/dcs.2019.20.1.41

Krumm, A. E., Waddington, R. J., Teasley, S. D., & Lonn, S. (2014). A learning management system-based early warning system for academic advising in undergraduate engineering. In J. A. Larusson & B. White (Eds.), Learning Analytics: From Research to Practice (pp. 103–119). https://doi.org/10.1007/978-1-4614-3305-7_6

Liz-Domínguez, M., Caeiro-Rodríguez, M., Llamas-Nistal, M., & Mikic-Fonte, F. A. (2019). Systematic literature review of predictive analysis tools in higher education. Applied Sciences (Switzerland), 9(24). https://doi.org/10.3390/app9245569

Lund, B., & Wang, T. (2023). Chatting about ChatGPT: How may AI and GPT impact academia and libraries? Library Hi Tech News, 40. https://doi.org/10.1108/LHTN-01-2023-0009

Makridakis, S. (2017). The forthcoming artificial intelligence (AI) revolution: Its impact on society and firms. Futures, 90, 46–60. https://doi.org/10.1016/j.futures.2017.03.006

Ng, D. T. K., Leung, J. K. L., Su, J., Ng, R. C. W., & Chu, S. K. W. (2023). Teachers’ AI digital competencies and twenty-first century skills in the post-pandemic world. Educational Technology Research and Development, 71(1), 137–161. https://doi.org/10.1007/s11423-023-10203-6

OpenAI. (2022, November 30th). Introducing ChatGPT [Blog]. OpenAI. https://openai.com/blog/chatgpt

Ortigosa, A., Carro, R. M., Bravo-Agapito, J., Lizcano, D., Alcolea, J. J., & Blanco, Ó. (2019). From Lab to Production: Lessons Learnt and Real-Life Challenges of an Early Student-Dropout Prevention System. IEEE Transactions on Learning Technologies, 12(2), 264–277. https://doi.org/10.1109/TLT.2019.2911608

Pedró, F., Subosa, M., Rivas, A., & Valverde, P. (2019). Artificial intelligence in education: challenges and opportunities for sustainable development. Working Papers on Education Policy, 7.

Pichai, S. (2023, February 6th). Google AI updates: Bard and new AI features in Search [Blog]. Google.com. https://blog.google/technology/ai/bard-google-ai-search-updates/

Pintrich, P. R. (2000). An Achievement Goal Theory Perspective on Issues in Motivation Terminology, Theory, and Research. Contemporary Educational Psychology, 25(1). https://doi.org/10.1006/ceps.1999.1017

Plak, S., Cornelisz, I., Meeter, M., & van Klaveren, C. (2022). Early warning systems for more effective student counselling in higher education: Evidence from a Dutch field experiment. Higher Education Quarterly, 76(1). https://doi.org/10.1111/hequ.12298

Popenici, S. A. D., & Kerr, S. (2017). Exploring the impact of artificial intelligence on teaching and learning in higher education. Research and Practice in Technology Enhanced Learning, 12(1). https://doi.org/10.1186/s41039-017-0062-8

Prinsloo, P. (2019). A social cartography of analytics in education as performative politics. British Journal of Educational Technology, 50(6). https://doi.org/10.1111/bjet.12872

Prinsloo, P., Slade, S., & Khalil, M. (2022). The answer is (not only) technological: Considering student data privacy in learning analytics. British Journal of Educational Technology, 53(4), 876–893. https://doi.org/10.1111/BJET.13216

Qin, F., Li, K., & Yan, J. (2020). Understanding user trust in artificial intelligence-based educational systems: Evidence from China. British Journal of Educational Technology, 51(5), 1693–1710. https://doi.org/10.1111/bjet.12994

Raffaghelli, J. E., Loria-Soriano, E., González, M. E. R., Bañeres, D., & Guerrero-Roldán, A. E. (2022). Extracted and Anonymised Qualitative Data on Students’ Acceptance of an Early Warning System [dataset]. Zenodo. https://doi.org/10.5281/ZENODO.6841129

Raffaghelli, J. E., Rodríguez, M. E., Guerrero-Roldán, A.-E., & Bañeres, D. (2022). Applying the UTAUT model to explain the students’ acceptance of an early warning system in Higher Education. Computers & Education, 182, 104468. https://doi.org/10.1016/j.compedu.2022.104468

Rienties, B., Herodotou, C., Olney, T., Schencks, M., & Boroowa, A. (2018). Making sense of learning analytics dashboards: A technology acceptance perspective of 95 teachers. International Review of Research in Open and Distance Learning, 19(5), 187–202. https://doi.org/10.19173/irrodl.v19i5.3493

Sáinz, M., & Eccles, J. (2012). Self-concept of computer and math ability: Gender implications across time and within ICT studies. Journal of Vocational Behavior, 80(2). https://doi.org/10.1016/j.jvb.2011.08.005

Scherer, R., & Teo, T. (2019). Editorial to the special section—Technology acceptance models: What we know and what we (still) do not know. In British Journal of Educational Technology (Vol. 50, Issue 5, pp. 2387–2393). Blackwell Publishing Ltd. https://doi.org/10.1111/bjet.12866

Selwyn, N. (2019). What’s the problem with learning analytics? Journal of Learning Analytics, 6(3). https://doi.org/10.18608/jla.2019.63.3

Seo, K., Tang, J., Roll, I., Fels, S., & Yoon, D. (2021). The impact of artificial intelligence on learner–instructor interaction in online learning. International Journal of Educational Technology in Higher Education, 18(1). https://doi.org/10.1186/s41239-021-00292-9

Tlili, A., Shehata, B., Adarkwah, M. A., Bozkurt, A., Hickey, D. T., Huang, R., & Agyemang, B. (2023). What if the devil is my guardian angel: ChatGPT as a case study of using chatbots in education. Smart Learning Environments, 10(1), 15. https://doi.org/10.1186/s40561-023-00237-x

Tzimas, D., & Demetriadis, S. (2021). Ethical issues in learning analytics: a review of the field. Educational Technology Research and Development, 69(2). https://doi.org/10.1007/s11423-021-09977-4

Valle, N., Antonenko, P., Dawson, K., & Huggins-Manley, A. C. (2021). Staying on target: A systematic literature review on learner-facing learning analytics dashboards. In British Journal of Educational Technology (Vol. 52, Issue 4). https://doi.org/10.1111/bjet.13089

van Brummelen, J., Tabunshchyk, V., & Heng, T. (2021). “Alexa, Can I Program You?”: Student Perceptions of Conversational Artificial Intelligence before and after Programming Alexa. Proceedings of Interaction Design and Children, IDC 2021. https://doi.org/10.1145/3459990.3460730

Venkatesh, V., Morris, M. G., Davis, G. B., & Davis, F. D. (2003). User acceptance of information technology: Toward a unified view. MIS Quarterly: Management Information Systems, 27(3), 425–478. https://doi.org/10.2307/30036540

Xing, W., Chen, X., Stein, J., & Marcinkowski, M. (2016). Temporal predication of dropouts in MOOCs: Reaching the low hanging fruit through stacking generalization. Computers in Human Behavior, 58, 119–129. https://doi.org/10.1016/j.chb.2015.12.007

Xu, J. J., & Babaian, T. (2021). Artificial intelligence in business curriculum: The pedagogy and learning outcomes. International Journal of Management Education, 19(3). https://doi.org/10.1016/j.ijme.2021.100550

Zander, L., Höhne, E., Harms, S., Pfost, M., & Hornsey, M. J. (2020). When Grades Are High but Self-Efficacy Is Low: Unpacking the Confidence Gap Between Girls and Boys in Mathematics. Frontiers in Psychology, 11, 2492. https://doi.org/10.3389/fpsyg.2020.552355

Zawacki-Richter, O., Marín, V. I., Bond, M., & Gouverneur, F. (2019). Systematic review of research on artificial intelligence applications in higher education – where are the educators? International Journal of Educational Technology in Higher Education, 16(1), 1–27. https://doi.org/10.1186/s41239-019-0171-0

Zhou, Y., Zhao, J., & Zhang, J. (2020). Prediction of learners’ dropout in E-learning based on the unusual behaviors. Interactive Learning Environments. https://doi.org/10.1080/10494820.2020.1857788

Zimmerman, B. J. (2008). Investigating self-regulation and motivation: Historical background, methodological developments, and future prospects. American Educational Research Journal, 45(1). https://doi.org/10.3102/0002831207312909